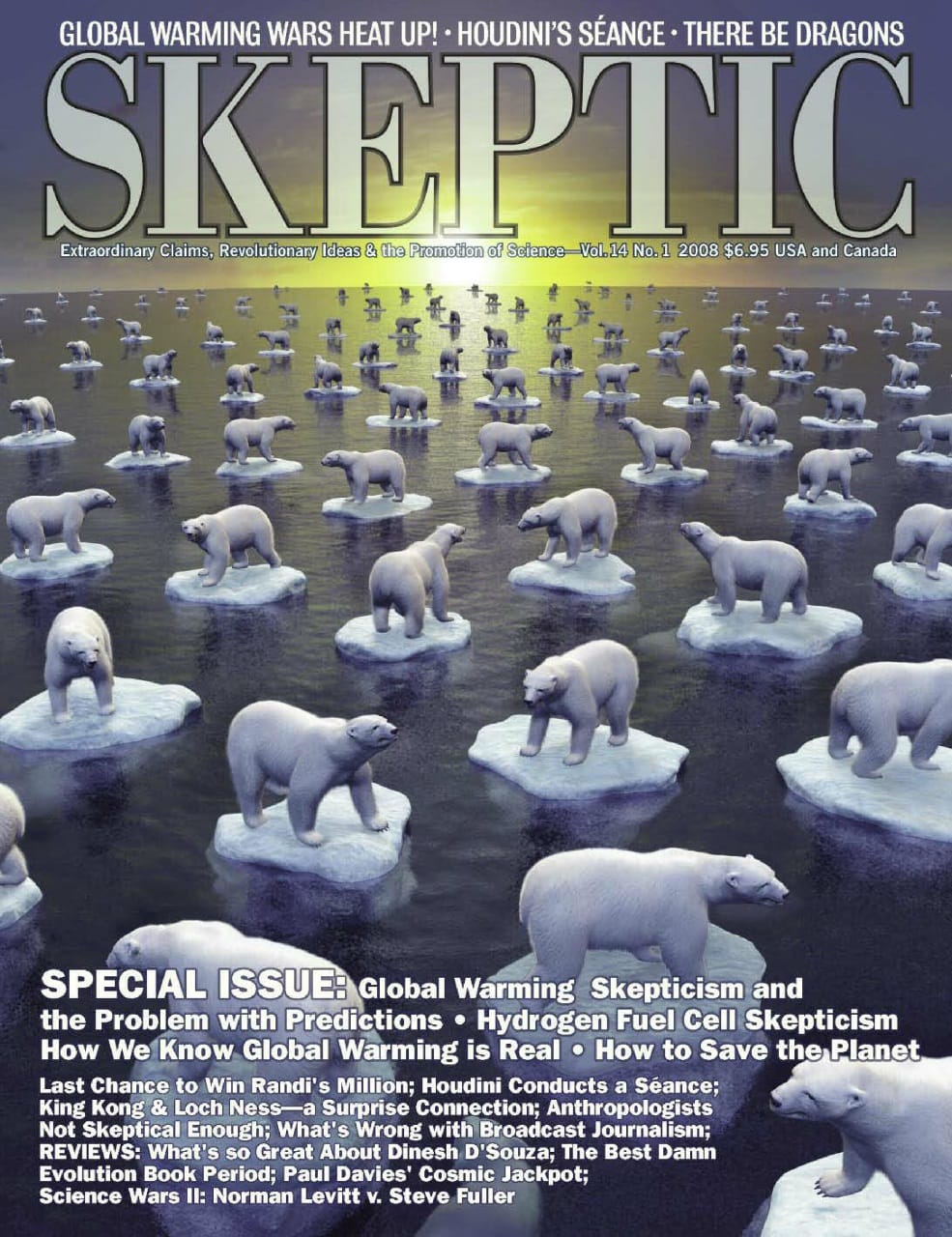

Global Warming Predictions

What's Inside

Volume 14 Number 1

- A Climate of Belief

  The claim that anthropogenic CO₂ is responsible for the current warming of Earth's climate is scientifically insupportable because climate models are unreliable

  by Patrick Frank

- How We Know Global Warming is Real

  The Science Behind Human-Induced Climate Change

  by Tapio Schneider

- Turning Around by 2020

  How to Solve the Global Warming Problem

  by William Calvin

- The Hydrogen Economy

  Savior of Humanity or an Economic Black Hole?

  by Alice Friedemann

- No Victory in the Textbook Wars

  by Glenn Branch

- SkepDoc’s Ill Tone

  by Ralph G. Walton, M.D.

- A Reconstruction of Houdini’s Famous Show Exposing Séance Fraud

  by Steven E. Rivkin

- Journalist Bites Reality!

  How Broadcast Journalism is Flawed in Such a Fundamental Way That Its Utility as a Tool for Informing Viewers is Almost Nil

  by Steve Salerno

- In Belief We Trust

  Why Anthropologists Abandon Skepticism When They Hear Claims About Supernatural Beliefs

  by Craig T. Palmer, Kathryn Coe, and Reed L. Wadley

- Last Chance to Win the Million-Dollar Challenge; Magnetic Therapy

  by James Randi

- Detox Quackery: From Footbaths to Fetishism

  by Harriet Hall, M.D.

- What the Fossils Say — In Spades!

  Evolution: What the Fossils Say and Why It Matters

  by Donald R. Prothero, reviewed by Tim Callahan

- What’s So Great About Dinesh D’Souza?

  What’s So Great About Christianity

  by Dinesh D’Souza, reviewed by Tim Callahan

- Jackpot or Crackpot?

  Cosmic Jackpot: Why Our Universe is Just Right for Life

  by Paul Davies, reviewed by Sid Deutsch

- The Painful Elaboration of the Fatuous

  Science v. Religion: Intelligent Design and the Problem of Evolution

  by Steve Fuller, reviewed by Norman Levitt
