On October 17, 2005, the talk show host and comedian Stephen Colbert introduced the word “truthiness” in the premiere episode of his show The Colbert Report:1 “We’re not talking about truth, we’re talking about something that seems like truth, the truth we want to exist.”2 Since then the word has become entrenched in our everyday vocabulary, but we’ve largely lost Colbert’s satirical critique of “living in a post-truth world.” Truthiness has become our truth. Kellyanne Conway opened the door to “alternative facts”3 while Oprah Winfrey exhorted you to “speak your truth.”4 And the co-founder of Skeptic magazine, Michael Shermer, has begun to regularly talk to his podcast guests about objective external truths and subjective internal truths, inside of which are historical truths, political truths, religious truths, literary truths, mythical truths, scientific truths, empirical truths, narrative truths, and cultural truths.5 It is an often-heard complaint that we live in a post-truth world, but what we really have is far too many claims to truth. Instead, we propose that the vital search for truth is actually best continued when we drop our assertions that we have something like an absolute Truth with a capital T.

Why is that? Consider one of our friends who is a Young Earth creationist. He believes the Bible is inerrant. He is convinced that every word it contains, including the six days of creation story of the universe, is Truth (spelled with a capital T because it is unquestionably, eternally true). From this position, he has rejected evidence brought to him from multiple disciplines that all converge on a much older Earth and universe. He has rejected evidence from fields such as biology, paleontology, astronomy, glaciology, and archeology, all of which should reduce his confidence in the claim that the formation of the Earth and every living thing on it, together with the creation of the sun, moon, and stars, all took place in literally six Earth days. Even when it was pointed out to him that the first chapter of Genesis mentions liquid water, light, and every kind of vegetation before there was a sun or any kind of star whatsoever, he claimed not to see a problem. His reply to such doubts is to simply say, “with God, all things are possible.”6

Lacking any uncertainty about the claim that “the Bible is Truth,” this creationist has only been able to conclude two things when faced with tough questions: (1) we are interpreting the Bible incorrectly, or (2) the evidence that appears to undermine a six-day creation is being interpreted incorrectly. These are inappropriately skeptical responses, but they are the only options left to someone who has decided beforehand that their belief is Truth. And, importantly, we have to admit that this observation could be turned back on us too. As soon as we become absolutely certain about a belief—as soon as we start calling something a capital “T” Truth—then we too become resistant to any evidence that could be interpreted as challenging it. After all, we are not absolutely certain that the account in Genesis is false. Instead, we simply consider it very, very unlikely, given all of the evidence at hand. We must keep in mind that we sample a tiny sliver of reality, with limited senses that only have access to a few of possibly many dimensions, in but one of quite likely multiple universes. Given this situation, intellectual humility is required.

Some history and definitions from philosophy are useful to examine all of this more precisely. Of particular relevance is the field of epistemology, which studies what knowledge is or can be. A common starting point is Plato’s definition of knowledge as justified true belief (JTB).7 According to this JTB formulation, all three of those components are necessary for our notions or ideas to rise to the level of being accepted as genuine knowledge as opposed to being dismissible as mere opinion. And in an effort to make this distinction clear, definitions for all three of these components have been developed over the ensuing millennia. For epistemologists, beliefs are “what we take to be the case or regard as true.”8 For a belief to be true, it doesn’t just need to seem correct now; “most philosophers add the further constraint that a proposition never changes its truth-value in space or time.”9 And we can’t just stumble on these truths; our beliefs require some reason or evidence to justify them.10

Readers of Skeptic will likely be familiar with skeptical arguments from Agrippa (the problem of infinite regress11), David Hume (the problem of induction12), Rene Descartes (the problem of the evil demon13), and others that have chipped away at the possibility of ever attaining absolute knowledge. In 1963, however, Edmund Gettier fully upended the JTB theory of knowledge by demonstrating—in what has come to be called “Gettier problems”14—that even if we managed to actually have a justified true belief, we may have just gotten there by a stroke of good luck. And the last 60 years of epistemology have shown that we can seemingly never be certain that we are in receipt of such good fortune.

This philosophical work has been an effort to identify an essential and unchanging feature of the universe—a perfectly justified truth that we can absolutely believe in and know. This Holy Grail of philosophy surely would be nice to have, but it makes sense that we don’t. Ever since Darwin demonstrated that all of life could be traced back to the simplest of origins, it has slowly become obvious that all knowledge is evolving and changing as well. We don’t know what the future will reveal and even our most unquestioned assumptions could be upended if, say, we’ve actually been living in a simulation all this time, or Descartes’ evil demon really has been viciously deluding us. It only makes sense that Daniel Dennett titled one of his recent papers, “Darwin and the Overdue Demise of Essentialism.”15

So, what is to be done after this demise of our cherished notions of truth, belief, and knowledge? Hold onto them and claim them anyway, as does the creationist? No. That path leads to error and intractable conflict. Instead, we should keep our minds open, and adjust and adapt to evidence as it becomes available. This style of thinking has been formalized and is known as Bayesian reasoning. Central to Bayesian reasoning is a conditional probability formula that helps us revise our beliefs to be better aligned with the available evidence. The formula is known as Bayes’ theorem. It is used to work out how likely something is, taking into account both what we already know and any new evidence. As a demonstration, consider a disease diagnosis, derived from a paper titled “How to Train Novices in Bayesian Reasoning”:

10 percent of adults who participate in a study have a particular medical condition. 60 percent of participants with this condition will test positive for the condition. 20 percent of participants without the condition will also test positive. Calculate the probability of having the medical condition given a positive test result.16

Most people, including medical students, get the answer to this type of question wrong. Some would say the accuracy of the test is 60 percent. However, the answer must be understood in the broader context of false positives and the relative rarity of the disease.

Simply putting actual numbers on the face of these percentages will help you visualize this. For example, since the rate of the disease is only 10 percent, that would mean 10 in 100 people have the condition, and the test would correctly identify six of these people. But since 90 of the 100 people don’t have the condition, yet 20 percent of them would also receive a positive test result, that would mean 18 people would be incorrectly flagged. Therefore, 24 total people would get positive test results, but only six of those would actually have the disease. And that means the answer to the question is only 25 percent. (And, by the way, a negative result would only give you about 95 percent likelihood that you were in the clear. Four of the 76 negatives would actually have the disease.)
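The counting argument above can be checked with a few lines of Python. This is only a sketch: the function name `posterior_from_counts` and its parameters are our own illustrative choices, and exact fractions are used so that rounding does not blur the result.

```python
from fractions import Fraction

def posterior_from_counts(population, base_rate, sensitivity, false_positive_rate):
    """Return (P(condition | positive test), P(no condition | negative test))."""
    with_condition = population * base_rate                    # 10 of 100
    without_condition = population - with_condition            # 90 of 100
    true_positives = with_condition * sensitivity              # 6
    false_positives = without_condition * false_positive_rate  # 18
    false_negatives = with_condition - true_positives          # 4
    true_negatives = without_condition - false_positives       # 72
    p_condition_given_positive = true_positives / (true_positives + false_positives)
    p_clear_given_negative = true_negatives / (true_negatives + false_negatives)
    return p_condition_given_positive, p_clear_given_negative

positive, negative = posterior_from_counts(
    100, Fraction(10, 100), Fraction(60, 100), Fraction(20, 100)
)
print(f"P(condition | positive) = {float(positive):.2f}")  # 0.25
print(f"P(clear | negative)     = {float(negative):.3f}")  # 0.947
```

Changing the base rate in the call shows how strongly the answer depends on how rare the condition is, which is the point most people miss.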

Now, most usages of Bayesian reasoning won’t come with such detailed and precise statistics. We will very rarely be able to calculate the probability that an assertion is correct by using known weights of positive evidence, negative evidence, false positives, and false negatives. However, now that we are aware of these factors, we can try to weigh them roughly in our minds, starting with the two core norms of Bayesian epistemology: thinking about beliefs in terms of probability and updating one’s beliefs as conditions change.17 We propose it may be easier to think in this Bayesian way using a modified version of a concept put forward by the philosopher Andy Norman, called Reason’s Fulcrum.18

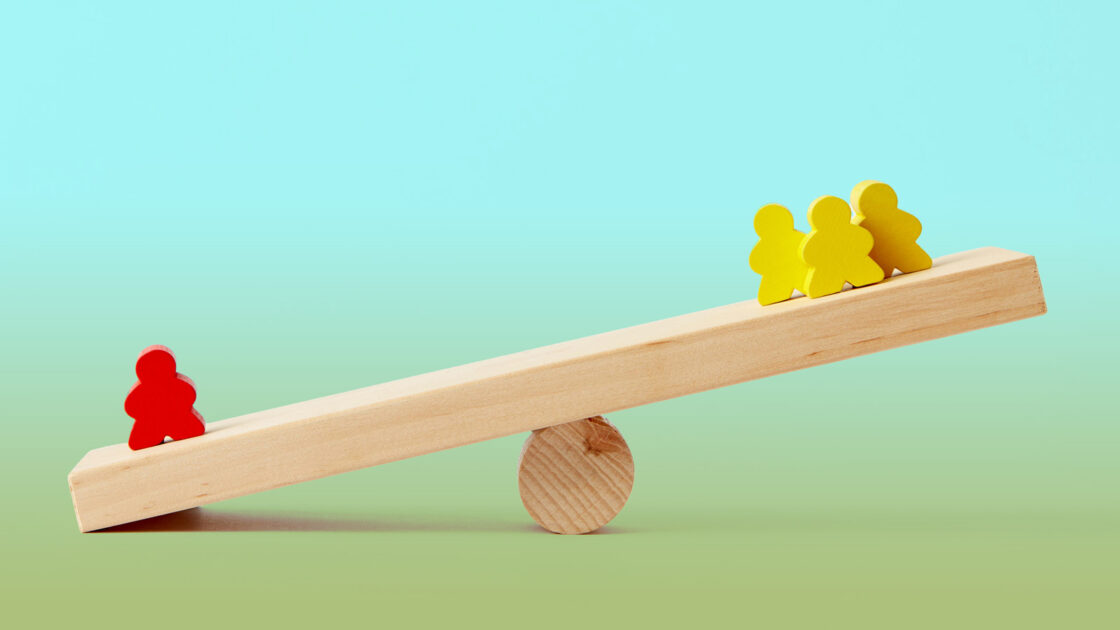

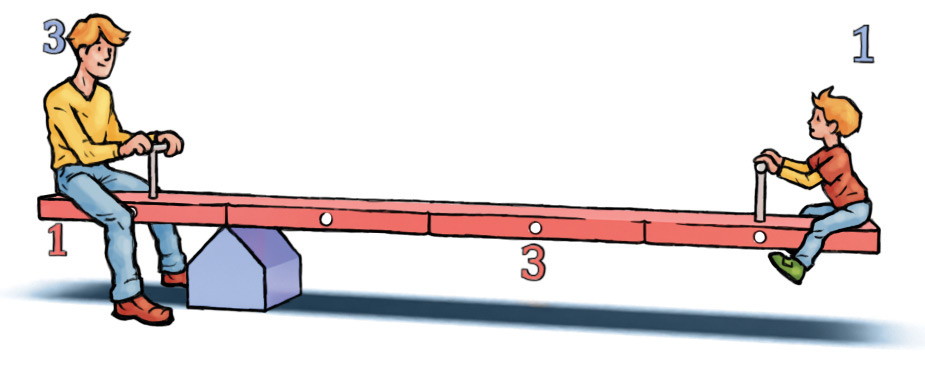

Figure 1. A Simple Lever. Balancing a simple lever can be achieved by moving the fulcrum so that the ratio of the beam is the inverse of the ratio of mass. Here, an adult who is three times heavier than the child is balanced by giving the child three times the length of beam. The mass of the beam is ignored. Illustrations in this article by Jim W.W. Smith.

Like Bayes, Norman asserts that our beliefs ought to change in response to reason and evidence, or as David Hume said, “a wise man proportions his belief to the evidence.”19 These changes could be seen as the movement of the fulcrum lying under a simple lever. Picture a beam or a plank (the lever) with a balancing point (the fulcrum) somewhere in the middle, such as a playground teeter-totter. As in Figure 1, you can balance a large adult with a small child just by positioning the fulcrum closer to the adult. And if you know their weights, then the location of that fulcrum can be calculated ahead of time because the ratio of the beam length on either side of the fulcrum is the inverse of the ratio of mass between the adult and child (e.g., a person three times heavier is balanced when the beam lengths on the adult’s side and the child’s side are in a ratio of 1:3).
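As a rough sketch of that calculation, the balance condition (mass times distance is equal on both sides) can be solved directly. The masses of 75 kg and 25 kg and the 4-unit beam are hypothetical values chosen only to give the 3:1 ratio described in the text, and `fulcrum_distances` is an illustrative name.

```python
from fractions import Fraction

def fulcrum_distances(mass_left, mass_right, beam_length):
    """Distances from the fulcrum to the left and right loads on a balanced beam.

    Assumes a massless beam with one load at each end, so that
    mass_left * d_left == mass_right * d_right and d_left + d_right == beam_length.
    """
    total = mass_left + mass_right
    d_left = Fraction(beam_length) * mass_right / total
    d_right = Fraction(beam_length) * mass_left / total
    return d_left, d_right

adult, child = 75, 25  # hypothetical masses in kg (a 3:1 ratio)
d_adult, d_child = fulcrum_distances(adult, child, 4)
print(f"adult side: {d_adult} unit(s), child side: {d_child} unit(s)")
```

The heavier load always ends up with the shorter arm, which is the inverse ratio the lever analogy relies on.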

If we now move to the realm of reason, we can imagine replacing the ratio of mass between an adult and child with the ratio of how likely the evidence is to be observed under a claim versus its counterclaim. Note how the phrase “how likely the evidence is to be observed” captures not just the absolute quantity of evidence but the relative quality of that evidence as well. Once this is considered, the balancing point at the fulcrum gives us our level of credence in each of our two competing claims.

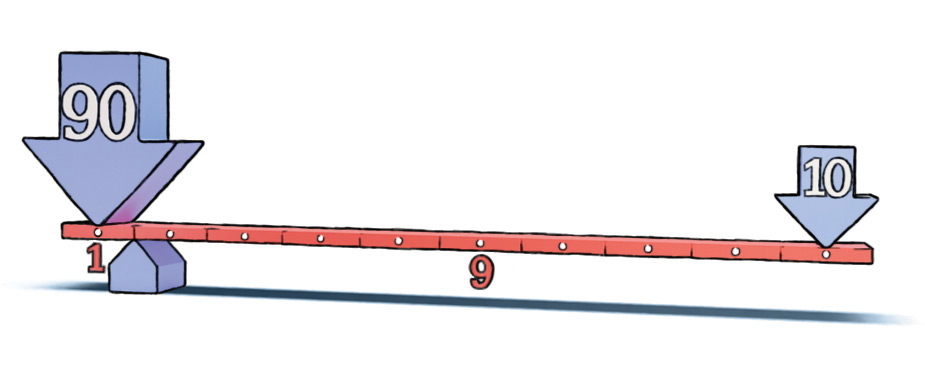

Figure 2. Ratio of 90–10 for People Without–With the Condition. A 10 percent chance of having a condition gives a beam ratio of 1:9. The location of the fulcrum shows the credence that a random person should have about their medical status.

To see how this works for the example previously given about a test for a medical condition, we start by looking at the balance point in the general population (Figure 2). Not having the disease is represented by 90 people on the left side of the lever, and having the disease is represented by 10 people on the right side. This is a ratio of 9:1. So, to get our lever to balance, we must move the fulcrum so that the length of the beam on either side of the balancing point has the inverse ratio of 1:9. This, then, is the physical depiction of a 10 percent likelihood of having the medical condition in the general population. There are 10 units of distance between the two populations and the fulcrum is on the far left, 1 unit away from all the negatives.

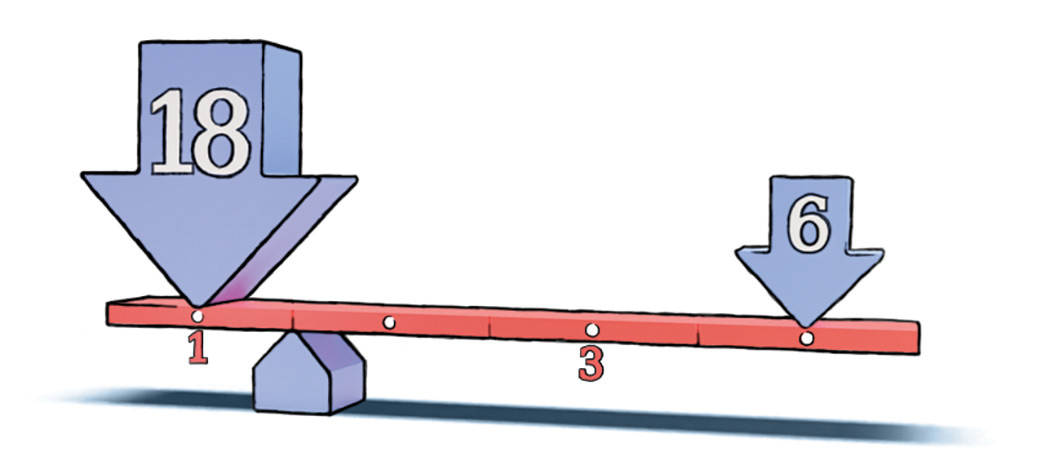

Figure 3. Ratio of 18 False Positives to 6 True Positives. A 1 to 3 beam ratio illustrates a 25 percent chance of truly having this condition. The location of the fulcrum shows the proper level of credence for someone if they receive a positive test.

Next, we want to see the balance point after a positive result (Figure 3). On the left: the test has a 20 percent false positive rate, so 18 of the 90 people stay on our giant seesaw even though they don’t actually have the condition. On the right: 60 percent of the 10 people who have the condition would test positive, so this leaves six people. Therefore, the new ratio after the test is 18:6, or 3:1. This means that in order to restore balance, the fulcrum must be shifted to the inverse ratio of 1:3. There are now four total units of distance between the left and right, and the fulcrum is 1 unit from the left. So, after receiving a positive test result, the probability of having the condition (being in the group on the right) is one in four, or 25 percent (the portion of beam on the left). This confirms the answer we derived earlier by counting cases, but many may find the concepts easier to grasp based on the visual representation.
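For readers who prefer symbols, the same result can be written with Bayes’ theorem. The notation here is our own shorthand rather than that of the cited paper: C stands for having the condition and + for a positive test. The second line is the odds form, which corresponds to the 1:3 beam ratio.

```latex
\begin{align*}
P(C \mid +) &= \frac{P(+ \mid C)\,P(C)}{P(+ \mid C)\,P(C) + P(+ \mid \neg C)\,P(\neg C)}
             = \frac{0.60 \times 0.10}{0.60 \times 0.10 + 0.20 \times 0.90}
             = \frac{0.06}{0.24} = 0.25 \\[6pt]
\frac{P(C \mid +)}{P(\neg C \mid +)} &= \frac{P(C)}{P(\neg C)} \times \frac{P(+ \mid C)}{P(+ \mid \neg C)}
             = \frac{1}{9} \times \frac{0.60}{0.20} = \frac{1}{3}
\end{align*}
```

Odds of 1 to 3 mean one chance in four, which is the same 25 percent.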

To recap, the position of the fulcrum under the beam is the balancing point of the likelihood of observing the available evidence for two competing claims. This position is called our credence. As we become aware of new evidence, our credence must move to restore a balanced position. In the example above, the average person in the population would have been right to hold a credence of 10 percent that they had a particular condition. And after getting a positive test, this new evidence would shift their credence, but only to a likelihood of 25 percent. That’s worse for the person, but actually still pretty unlikely. Of course, more relevant evidence in the future may shift the fulcrum further in one direction or another. That is the way Bayesian reasoning attempts to wisely proportion one’s credence to the evidence.
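The recap can also be sketched in code, under the assumption that the “weights” on each side of the beam are simply the head counts used in Figures 2 and 3. The function name `fulcrum` and its return values are illustrative only.

```python
from fractions import Fraction

def fulcrum(weight_left, weight_right):
    """Return (credence in the right-hand claim, beam length in units,
    fulcrum distance from the left end) for a balanced beam."""
    ratio = Fraction(weight_left, weight_right)          # 90:10 reduces to 9:1
    beam_units = ratio.numerator + ratio.denominator     # 9 + 1 = 10 units
    credence_right = Fraction(weight_right, weight_left + weight_right)
    distance_from_left = credence_right * beam_units
    return credence_right, beam_units, distance_from_left

for label, left, right in [("before the test", 90, 10), ("after a positive test", 18, 6)]:
    credence, units, distance = fulcrum(left, right)
    print(f"{label}: credence {float(credence):.2f}, "
          f"fulcrum {distance} unit(s) from the left on a {units}-unit beam")
```

In both cases the fulcrum sits one unit from the left; what changes is the total length of the beam, from ten units to four.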

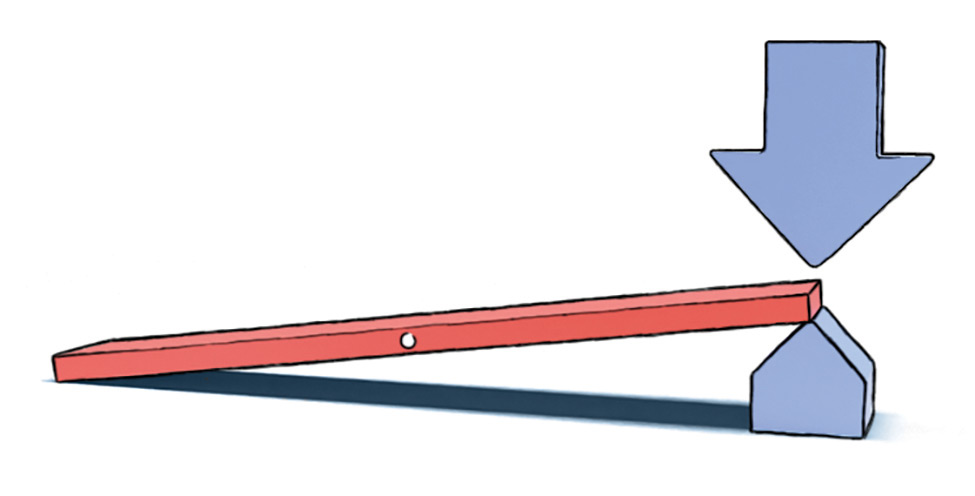

Figure 4. Breaking Reason’s Fulcrum. Absolute certainty makes Bayes’ theorem unresponsive to evidence in the same way that a simple lever is unresponsive to mass when it becomes a ramp.

What about our Young Earth creationist friend? When using Bayes’ theorem, the absolute certainty he holds starts with a credence of zero percent or 100 percent and always results in an end credence of zero percent or 100 percent, regardless of what any possible evidence might show. To guard against this, the statistician Dennis Lindley proposed “Cromwell’s Rule,” based on Oliver Cromwell’s famous 1650 quip: “I beseech you, in the bowels of Christ, think it possible that you may be mistaken.”20 This rule simply states that you should never assign a probability of zero percent or 100 percent to any proposition. Once we frame our friend’s certainty in the Truth of biblical inerrancy as setting his fulcrum to the extreme end of the beam, we get a clear model for why he is so resistant to counterevidence. Absolute certainty breaks Reason’s Fulcrum. It removes any chance for leverage to change a mind. When beliefs reach the status of “certain truth” they simply build ramps on which any future evidence effortlessly slides off (Figure 4).
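A small sketch shows why Cromwell’s Rule matters. The likelihoods below (evidence that is 999 times more probable if the claim is false) are hypothetical numbers picked for illustration, and `update` is our own helper name.

```python
def update(prior, p_evidence_if_true, p_evidence_if_false):
    """Apply Bayes' theorem once and return the updated credence.

    Note: this divides by zero if the evidence is impossible under every
    hypothesis that still has non-zero prior weight.
    """
    numerator = p_evidence_if_true * prior
    denominator = numerator + p_evidence_if_false * (1.0 - prior)
    return numerator / denominator

# Hypothetical evidence that strongly contradicts the claim.
P_IF_TRUE, P_IF_FALSE = 0.001, 0.999

for prior in (1.0, 0.999, 0.0):
    credence = prior
    for _ in range(3):  # three independent pieces of such evidence
        credence = update(credence, P_IF_TRUE, P_IF_FALSE)
    print(f"prior {prior} -> credence after three updates: {credence:.6f}")
```

A prior of exactly 1 or 0 comes out unchanged however much evidence is supplied, while a prior of 0.999 drops to roughly even odds after a single update and keeps falling.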

So far, this is the standard way of treating evidence in Bayesian epistemology to arrive at a credence. The lever and fulcrum depictions provide a tangible way of seeing this, which may be helpful to some readers. However, we also propose that this physical model might help with a common criticism of Bayesian epistemology. In the relevant academic literature, Bayesians are said to “hardly mention” sources of knowledge, the justification for one’s credence is “seldom discussed,” and “Bayesians have hardly opened their ‘black box’, E, of evidence.”21 We propose to address this by first noting it should be obvious from the explanations above that not all evidence deserves to be placed directly onto the lever. In the medical diagnosis example, we were told exactly how many false negatives and false positives we could expect, but this is rarely known. Yet, if ten drunken campers over the course of a few decades swear they saw something that looked like Bigfoot, we would treat that body of evidence differently than if it were nine drunken campers and footage from one high-definition camera of documentarians working for the BBC. How should we depict this difference between the quality of evidence versus the quantity of evidence?

We don’t yet have firm rules or “Bayesian coefficients” for how to precisely treat all types of evidence, but we can take some guidance from the history of the development of the scientific method. Evidential claims can start with something very small, such as one observation under suspect conditions given by an unreliable observer. In some cases, perhaps that’s the best we’ve got for informing our credences. Such evidence might feel fragile, but…who knows? The content could turn out to be robust. How do we strengthen it? Slowly, step by step, we progress to observations with better tools and conditions by more reliable observers. Eventually, we’re off and running with the growing list of reasons why we trust science: replication, verification, inductive hypotheses, deductive predictions, falsifiability, experimentation, theory development, peer review, social paradigms, incorporating a diversity of opinions, and broad consensus.22

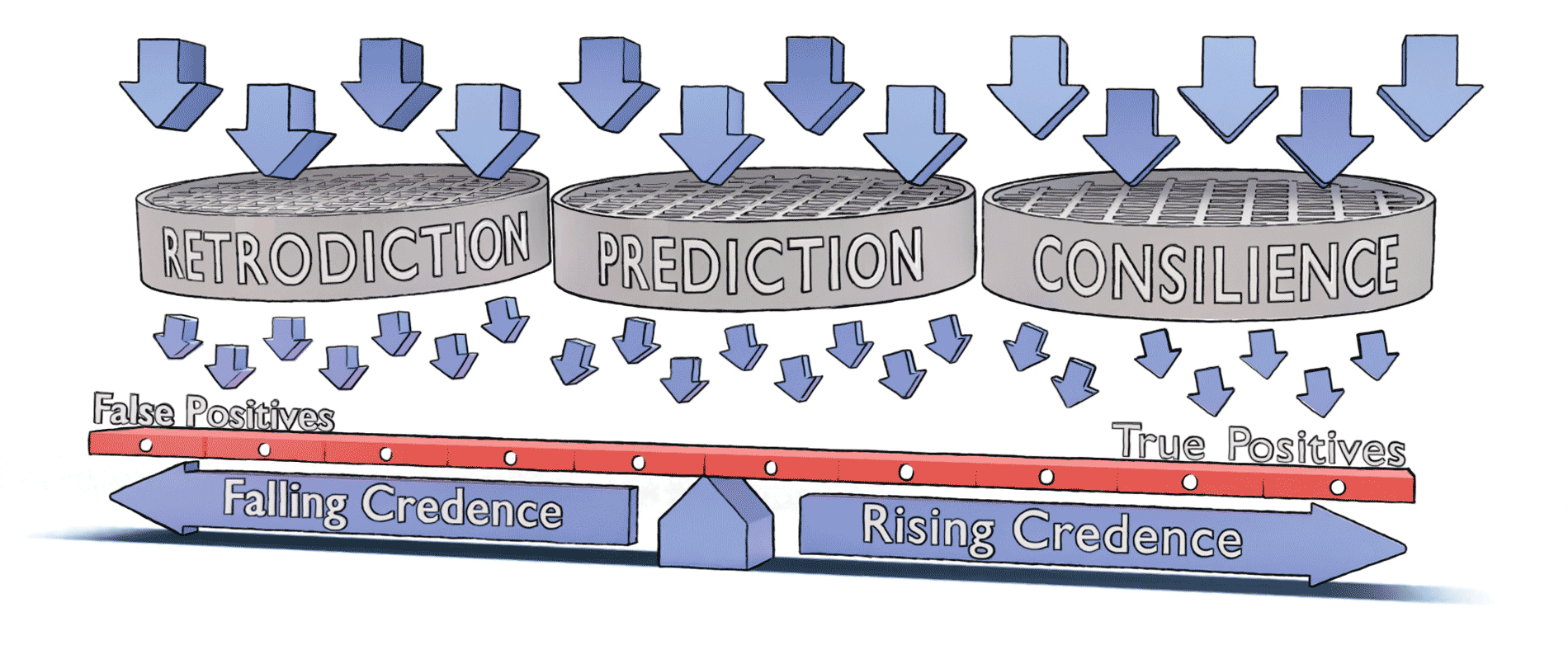

We can also bracket these various knowledge-generating activities into three separate categories for theories. The simplest type of theory we have explains previous evidence. This is called retrodiction. All good theories can explain the past, but we have to be aware that this is also what “just-so stories” do, as in Rudyard Kipling’s entertaining theory for how Indian rhinoceroses got their skin: cake crumbs made them so itchy they rubbed their skin until it became raw, stretched, and all folded up.23

Even better than simply explaining what we already know, good theories should make predictions. Newton’s theories predicted that a comet would appear around Christmastime in 1758. When this unusual sight appeared in the sky on Christmas Day, the comet (named for Newton’s close friend Edmond Halley) was taken as very strong evidence for Newtonian physics. Theories such as this can become stronger the more they explain and predict further evidence.

This article appeared in Skeptic magazine 28.4


Finally, beyond predictive theories, there are ones that can bring forth what William Whewell called consilience.24 Whewell coined the term scientist and he described consilience as what occurs when a theory that is designed to account for one type of phenomenon turns out to also account for another completely different type. The clearest example is Darwin’s theory of evolution. It accounts for biodiversity, fossil evidence, geographical population distribution, and a huge range of other mysteries that previous theories could not make sense of. And this consilience is no accident—Darwin was a student of Whewell’s and he was nervous about sharing his theory until he had made it as robust as possible.

Figure 5. The Bayesian Balance. Evidence is sorted by sieves of theories that provide retrodiction, prediction, and consilience. Better and better theories have lower rates of false positives and require a greater movement of the fulcrum to represent our increased credence. Evidence that does not yet conform to any theories at all merely contributes to an overall skepticism about the knowledge we thought we had.

Combining all of these ideas, we propose a new way (Figure 5) of sifting through the mountains of evidence the world is constantly bombarding us with. We think it is useful to consider the three different categories of theories, each dealing with different strengths of evidence, as a set of sieves by which we can first filter the data to be weighed in our minds. In this view, some types of evidence might be rather low quality, acting like a medical test with false positives near 50 percent. Such poor evidence goes equally on each side of the beam and never really moves the fulcrum. However, other evidence is much more likely to be reliable and can be counted on one side of the beam at a much higher rate than the other (although never with 100 percent certainty). And evidence that does not fit with any theory whatsoever really just ought to make us feel more skeptical about what we think we know until and unless we figure out a way to incorporate it into a new theory.
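The claim that poor evidence “never really moves the fulcrum” can be illustrated with the same update rule. In this sketch the three likelihood pairs are hypothetical stand-ins for weak, moderate, and strong evidence; only the 60 percent and 20 percent pair comes from the medical example earlier in the article.

```python
from fractions import Fraction

def update(prior, p_evidence_if_true, p_evidence_if_false):
    """Bayes' theorem for one claim and its counterclaim."""
    numerator = p_evidence_if_true * prior
    return numerator / (numerator + p_evidence_if_false * (1 - prior))

prior = Fraction(1, 10)

# Evidence that is equally likely either way goes on both sides of the beam.
coin_flip = update(prior, Fraction(1, 2), Fraction(1, 2))
# The medical test from the earlier example: 60% true positives, 20% false positives.
decent = update(prior, Fraction(6, 10), Fraction(2, 10))
# Hypothetical stronger evidence: 90% true positives, 5% false positives.
strong = update(prior, Fraction(9, 10), Fraction(1, 20))

for name, credence in [("coin flip", coin_flip), ("decent", decent), ("strong", strong)]:
    print(f"{name:>9}: credence {float(credence):.3f}")
```

What moves the credence is the ratio between the two likelihoods, not the sheer amount of evidence, which is one way to read the guiding question “Could my evidence be observed even if I’m wrong?”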

We submit that this mental model of a Bayesian Balance allows us to adjust our credences more easily and intuitively. Also, it never tips the lever all the way over into unreasonable certainty. To use it, you don’t have to delve into the history of philosophy, epistemology, skepticism, knowledge, justified true beliefs, Bayesian inferences, or difficult calculations using probability notation and unknown coefficients. You simply need to keep weighing the evidence and paying attention to which kinds of evidence are more or less likely to count. Remember that observations can sometimes be misleading, so a good guiding principle is, “Could my evidence be observed even if I’m wrong?” Doing so fosters a properly skeptical mindset. It frees us from the truth trap, yet enables us to move forward, wisely proportioning our credences as best as the evidence allows us.

About the Author

Zafir Ivanov is a writer and public speaker focusing on why we believe and why it’s best we believe as little as possible. His lifelong interests include how we form beliefs and why people seem immune to counterevidence. He collaborated with the Cognitive Immunology Research Initiative and The Evolutionary Philosophy Circle. Watch his TED talk.

Ed Gibney writes fiction and philosophy while trying to bring an evolutionary perspective to both of those pursuits. He has previously worked in the federal government trying to make it more effective and efficient. He started a Special Advisor program at the U.S. Secret Service to assist their director with this goal, and he worked in similar programs at the FBI and DHS after business school and a stint in the Peace Corps. His work can be found at evphil.com.

References

1. https://rb.gy/ms7xw

2. https://rb.gy/erira

3. https://rb.gy/pjkay

4. https://rb.gy/yyqh0

5. https://rb.gy/96p2g

6. https://rb.gy/f9rj3

7. https://rb.gy/5sdni

8. https://rb.gy/zdcqn

9. https://rb.gy/3gke6

10. https://rb.gy/1no1h

11. https://rb.gy/eh2fl

12. https://rb.gy/2k9xa

13. Gillespie, M. A. (1995). Nihilism Before Nietzsche. University of Chicago Press.

14. https://rb.gy/4iavf

15. https://rb.gy/crv9j

16. https://rb.gy/zb862

17. https://rb.gy/dm5qc

18. Norman, A. (2021). Mental Immunity: Infectious Ideas, Mind-Parasites, and the Search for a Better Way to Think. Harper Wave.

19. https://rb.gy/2k9xa

20. Jackman, S. (2009). The Foundations of Bayesian Inference. In Bayesian Analysis for the Social Sciences. John Wiley & Sons.

21. Hajek, A., & Lin, H. (2017). A Tale of Two Epistemologies? Res Philosophica, 94(2), 207–232.

22. Oreskes, N. (2019). Why Trust Science? Princeton University Press.

23. https://rb.gy/2us27

24. Whewell, W. (1847). The Philosophy of the Inductive Sciences, Founded Upon Their History. London: J.W. Parker.

This article was published on April 19, 2024.