The world currently faces two energy crises: We have too little energy, and we have too much.

We have too little energy because the main problem that the bulk of humanity faces today, every day, is poverty. To provide a decent standard of living for all, humanity is going to have to generate and put to use many times more energy than it does today.

We have too much energy, because at the rate we are currently using it, we are measurably changing the Earth’s climate and chemistry, and if we keep increasing our use—which we must and will—we could change it in ways that prove catastrophic on a global scale.

Fossil Fuel and its Effects

Many have chosen to focus on the second problem, proposing to reduce the use of fossil fuels by taxing them, thereby making basic necessities less affordable. I believe that such approaches to the problem are unethical, and while people may debate their ethics, there is no debating the fact that they have not worked.

Indeed, they have failed spectacularly. From 1990, when world leaders first mobilized to try to suppress CO2 emissions, to today, total global annual carbon use doubled from 5 billion tons to 10 billion tons. This followed a pattern of doubling our carbon use every thirty years for more than a century. In 1900, humanity burned 0.6 billion tons of carbon per year. This doubled to 1.2 billion tons in 1930, doubled again to 2.5 billion tons in 1960, then yet again to 5 billion tons in 1990, and once more to 10 billion tons now.1
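The doubling pattern can be checked with a few lines of arithmetic. The sketch below is illustrative only: it assumes an idealized 30-year doubling time anchored at the 1900 figure of 0.6 billion tons, treats "now" as 2020, and uses a hypothetical function name, `carbon_use`.

```python
def carbon_use(year, base_year=1900, base_tons=0.6, doubling_years=30):
    """Idealized annual carbon use in billion tons, doubling every `doubling_years`.

    Assumption: a perfectly smooth exponential anchored at the 1900 figure.
    """
    return base_tons * 2 ** ((year - base_year) / doubling_years)


# Compare the idealized curve with the years quoted in the text.
for year in (1900, 1930, 1960, 1990, 2020):
    print(year, round(carbon_use(year), 1))
```

The idealized curve gives 2.4, 4.8, and 9.6 billion tons for 1960, 1990, and 2020, close to the rounded figures of 2.5, 5, and 10 quoted in the text.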

The reason for this increase is simple. Energy is fundamental to the production and delivery of all goods and services. If you have access to energy, and the things made by energy, you are rich. If not, you are poor. People don’t like being poor, and they will do what it takes to remedy that condition. Despite the Depression, two world wars, and all sorts of other natural and human-caused catastrophes over the recent past, people have, on the whole, been very successful at finding such remedies. In 1930, the average global GDP per capita, in today’s money, was $1500/year. Today it is about $12,000/year, an increase closely mirroring the climb in energy consumption.

This rise in energy use has enabled a miraculous uplifting of the human condition, dramatically increasing health, life expectancy, personal safety, literacy, mobility, liberty, and every other positive metric of human existence nearly everywhere. But $12,000/year is still too low. In the U.S., we average $60,000/year, and there is still plenty of poverty here. To raise the whole world to current American standards will require multiplying global energy use at least fivefold—and probably more like tenfold once population growth is taken into account.

There is every reason to expect human energy production and use to double again by 2050, and yet again by 2080. Every person of goodwill should earnestly hope for such an outcome because it implies a radical and necessary improvement in the quality of life for billions of people. That, in fact, is why it is going to happen, whether ivory tower theorists wish it or not. Humanity is not going to settle for less.

Human energy production today is overwhelmingly based on fossil fuels. Were it to remain so, while we double and redouble our energy use, the effects on the planet would become serious.

It is unfortunate that the debate over global warming has been politicized to the point where opposing partisans have chosen to either deny it or grossly exaggerate it. Neither approach is helpful. So, I’m not going to indulge in the customary hysteria and tell you that we have only 18 months or 18 years to decarbonize the economy or face doom by fires and floods, catastrophic rainfalls and droughts, evaporating poles, or glaciers advancing rapidly together with unstoppable armies of ravenous wolves, or similar biblical plagues held by some to be responsible for the Great Silence of the millions of extraterrestrial civilizations driven to their extinction by their inability to pass successfully through the Great Filter of global warming.2

Nevertheless, global warming is certainly real. According to solid measurements, average temperatures have increased by about one degree centigrade since 1870. That, admittedly, does not sound like a big deal. It is equivalent to the warming that a New Yorker (NYC, that is) would experience if he or she moved to central New Jersey. So there is no climate catastrophe now. However, the climatic effects of continued CO2 emissions at a level an order of magnitude higher than today would be an entirely different matter.

Moreover, while climate change will still take some time before it becomes an acute matter (in reality, as opposed to agitation), other effects of the emissions of fossil power plants are already quite serious. First among these is air pollution: not CO2, but particulates, carbon monoxide, nitrogen oxides, and sulfur dioxide. Worldwide, these emissions are currently killing people at a rate of over 8 million per year,3 and causing many billions of dollars of increased health care costs.

Then there is the CO2 itself. While CO2-induced warming has only raised global temperatures by about one percent of the 100-degree Centigrade range inhabited by terrestrial surface life from the equator to the poles (or 0.35 percent of the 287 K average absolute temperature of the Earth’s surface) since 1870, the combustion of fossil fuels has raised the atmosphere’s CO2 content by 50 percent (from 280 ppm to 420 ppm). That sounds like a lot and it is. A 50 percent increase in CO2 represents a truly significant change in the Earth’s atmospheric chemistry, with readily observable effects.
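The three percentages in this comparison follow directly from the figures quoted. Here is a minimal sketch of the arithmetic; the variable names are illustrative, and every input is taken from the paragraph above.

```python
warming_c = 1.0          # warming since 1870, degrees C
life_range_c = 100.0     # equator-to-pole temperature range cited in the text
mean_surface_k = 287.0   # average absolute surface temperature, kelvin
co2_then_ppm = 280.0     # atmospheric CO2 before large-scale fossil fuel use
co2_now_ppm = 420.0      # atmospheric CO2 today

share_of_range = 100 * warming_c / life_range_c
share_of_absolute = 100 * warming_c / mean_surface_k
co2_increase = 100 * (co2_now_ppm - co2_then_ppm) / co2_then_ppm

print(f"warming as share of habitable range: {share_of_range:.2f}%")
print(f"warming as share of absolute temperature: {share_of_absolute:.2f}%")
print(f"CO2 increase: {co2_increase:.0f}%")
```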

In some respects, these effects have actually been beneficial. For example, as a result of CO2 enrichment of the atmosphere, the rate of plant growth on land has increased significantly. There is no doubt about this. NASA photographs taken from orbit show an increase in the average rate of plant growth of 15 percent worldwide since 1985.4 That’s great, but there is a problem: we have seen no comparable improvement in the oceans. Quite the contrary. Evidence is mounting that increased acidification of the ocean caused by take-up of CO2 is killing coral reefs and other important types of marine life.

There are things we can do to counteract this, for example farming the oceans, to put some of this excess CO2 to work to increase the abundance of the marine biosphere. However, there are limits to the capacity of marine and even terrestrial biomes to take advantage of increased CO2 fertilization of the atmosphere. If fossil fuel use continues to rise exponentially to support world development, this capacity will be overrun. All fertilizers—such as nitrates, or even water—become harmful when present in too great abundance. This could well become the case should our current river of CO2 emissions become a flood. It is not certain at what point the biosphere’s defenses will fail, but that is not an experiment we should wish to run.

In saying all this, I do not wish to make a case against fossil fuels. The emissions resulting from burning such fuels may be killing 8 million people every year, but the energy they produce is enriching the lives of billions. The positive transformation of human life that has been accomplished by the massive increase in power enabled by fossil fuel use is beyond reckoning, and far from abandoning it, we must—and will—take it much further.

So, the bottom line is this—we are going to need to produce a lot more energy, and it will need to be carbon-free. The only way to do that is through nuclear power. In my book, I go into great detail on how nuclear power is generated, which new technologies are coming online, and what all this will mean for the future of humanity, including space exploration. For present purposes, let me address the deepest concerns many people have about nuclear power plants. Some say they emit cancer-causing radiation, that there is no way to dispose of the waste they produce, that they are prone to catastrophic accidents, and that they could even be made to explode like nuclear bombs! These are serious charges. Let’s investigate them.

Routine Nuclear Power Plant Radiological Emissions

Americans measure radiation doses in units called rems, or, more often, millirems (abbreviated mrem), which are thousandths of a rem. While high doses of radiation delivered over short periods of time can cause radiation poisoning or cancer, there is, according to the U.S. Nuclear Regulatory Commission, “no data to establish unequivocally the occurrence of cancer following exposure to low doses and dose rates – below 10,000 mrem.”5 Despite this scientific fact, the NRC and other international regulatory authorities insist on using what is known as the “Linear No Threshold” (LNT) method for assessing risk.

According to LNT methodology, a low dose of radiation carries a proportional fraction of the risk of a larger dose. So, according to LNT theory, since a 1000 rem dose represents a 100 percent risk of death, a 100 mrem dose should carry a 0.01 percent risk. If this were true, then one person would die for every 10,000 people exposed to 100 mrem. Since there are 330 million Americans and they already receive an average of 270 mrem per year, this would work out to about 90,000 Americans dying every year from background radiation, a result with no relationship to reality. The fallacy of the LNT theory is the same as concluding that since drinking 100 glasses of wine in an hour would kill you, drinking one glass represents a one percent risk of death. It’s absurd, and the regulators know it. Let’s look at the data.
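The LNT arithmetic in this paragraph can be reproduced in a few lines. This is a sketch of the straight-line extrapolation as the text describes it, not a regulatory model; the function name `lnt_risk` is hypothetical, and the 1000 rem anchor, population, and background dose are the figures quoted above.

```python
def lnt_risk(dose_mrem, lethal_dose_rem=1000.0):
    """Fractional risk of death under a straight-line (LNT) extrapolation.

    Assumption from the text: 1000 rem corresponds to a 100 percent risk.
    """
    dose_rem = dose_mrem / 1000.0
    return dose_rem / lethal_dose_rem


population = 330_000_000      # Americans
background_mrem = 270.0       # average annual dose per person

risk_100_mrem = lnt_risk(100.0)
implied_deaths = population * lnt_risk(background_mrem)

print(f"LNT risk at 100 mrem: {risk_100_mrem:.2%}")
print(f"implied deaths per year from background dose: {implied_deaths:,.0f}")
```

The extrapolation yields 89,100 implied deaths per year, which the text rounds to 90,000.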

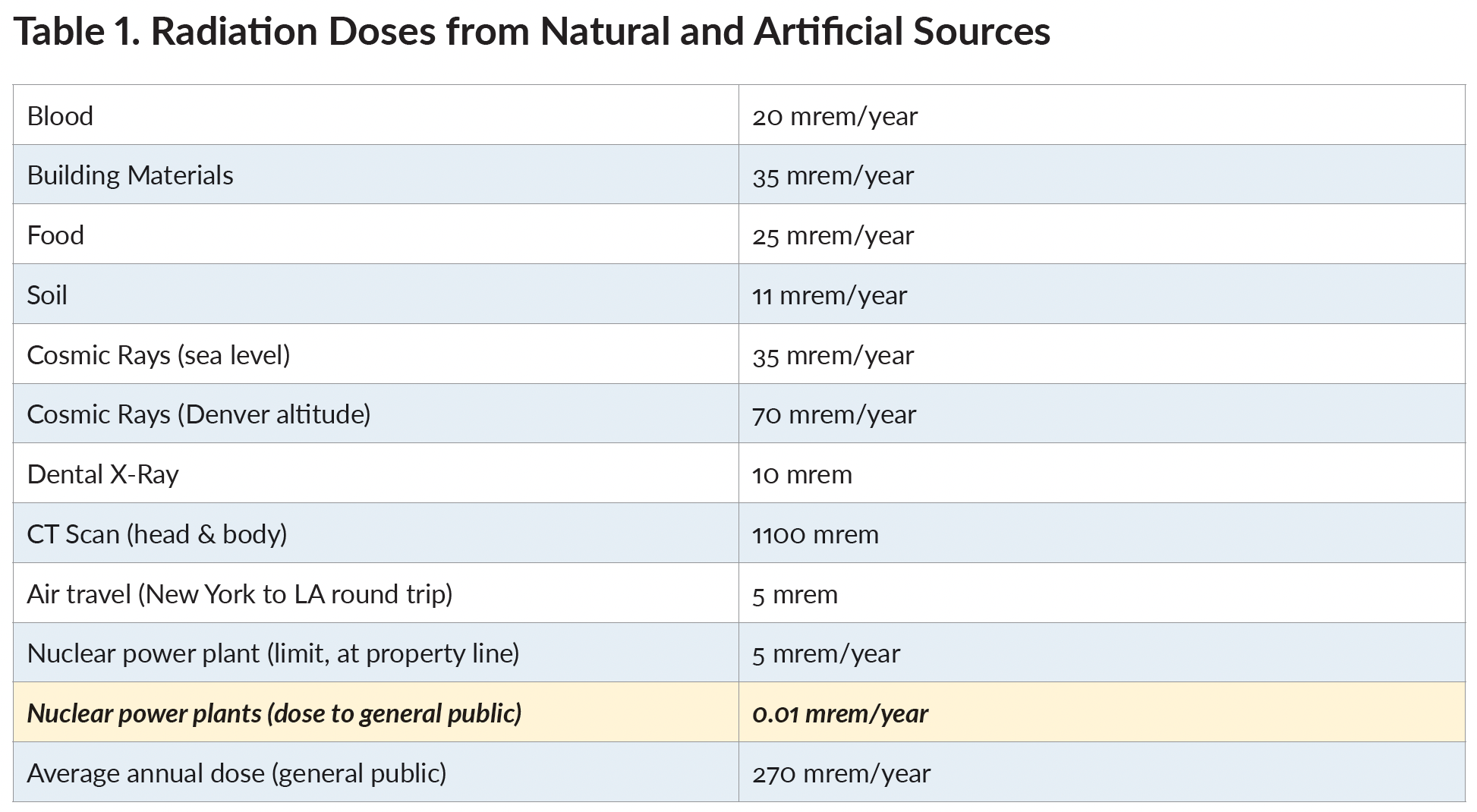

Table 1 gives the annual radiation doses that each American can expect to receive from both natural and artificial radiation sources.6

Examining Table 1, we see that the amount of radiation dose that the public receives from nuclear power plants is insignificant compared to what they receive from their own blood (which contains radioactive potassium-40), from the homes they live in, from the food they eat (watch out for bananas), from the medical care and air travel they enjoy, from the planet on which they reside, and from the universe in which the planet exists.

Nuclear Waste Disposal

One of the strangest arguments against nuclear power is the claim that there is nothing that can be done with the waste. In fact, it is the compact nature of the limited waste produced by nuclear energy that makes it uniquely attractive. A single 1000 MWe coal-fired power plant (MWe means megawatts electric, the electricity output capability of the plant, as opposed to MWt, megawatts thermal, the input energy required) produces about 600 tons of highly toxic waste daily, which is more than the entire American nuclear industry produces in a year. Despite the clear, non-hypothetical consequences of this large-scale toxic pollution, no one is even talking about establishing a waste isolation facility for this material, because it is not remotely possible. In contrast to such an intractable problem, the disposal of nuclear waste is trivial.

There are two excellent places to store nuclear waste: either under the ocean bottom or under the desert. The U.S. Department of Energy has opted for the desert, but the ocean solution is much simpler and cheaper. Let’s talk about that first.

The way to dispose of nuclear waste at sea works as follows: first, you glassify the waste, that is, turn it into a water-insoluble, glass-like form. Then you put it in stainless steel cans, take it out in a ship, and drop it into the mid-ocean, directly above sub-seabed sediments that have been, and will be, geologically stable for tens of millions of years. Falling down through several thousand meters of water, your canisters will reach velocities that will allow them to bury themselves deep under the mud. After that, your waste is not going anywhere, and no one will ever be able to get their hands on it.

This solution has been well-known for years.7 Unfortunately, it has been shunned by Energy Department bureaucrats who seemingly prefer a large land-based facility because that involves a much bigger budget, as well as by environmentalists who wish to prevent the problem of nuclear waste disposal from being solved. Thus, in the 1980s, the DOE looked the other way and allowed Greenpeace to pressure the London Dumping Convention into banning sub-seabed disposal of nuclear waste. That ban expires in 2025. If world leaders are in any way serious about finding an alternative to fossil fuels to meet the energy needs of modern society, they will see that the ban is not renewed.

If, however, the ban is renewed, the Department of Energy’s plan to put the waste under Yucca Mountain in the Nevada desert remains an alternative. While wildly over-priced, the plan has been exhaustively and thoroughly vetted, and it meets even the most stringent standards of public safety. The public dosage would be required to stay below 15 millirems of radiation per year for at least 10,000 years.8 The best estimates, though, show that the average public dosage would be far, far less: under 0.0001 mrem/year for 10,000 years.9

In 1972, when the Sierra Club announced its opposition to nuclear power, it identified preventing the safe disposal of nuclear waste as a key tactic to use to wreck the nuclear industry. As a result of that campaign, nuclear waste reprocessing, sub-seabed disposal, and land-based disposal have all been blocked, forcing utilities to store their radioactive waste onsite. This has added costs to the utilities’ operations, which have been passed on to the public both through higher rates and through higher taxes to compensate utilities for these costs, as required by law.

Storing nuclear waste on sites near major metropolitan areas could, under worst-case scenarios such as Fukushima, expose the public to dangers of radiological release that would be quite impossible if the waste were stored in remote areas. There is no technical obstacle to either nuclear waste reprocessing (the French do it) or land-based disposal (the U.S. military has been storing its waste since 1999 in salt formations in the Waste Isolation Pilot Plant near Carlsbad, New Mexico).

Nuclear Accidents

Nuclear accidents are certainly possible, but rare. Over the course of its entire history, the world’s commercial nuclear industry has had three major accidents: one at Three Mile Island in Pennsylvania in 1979; one in Fukushima, Japan, in March 2011; and the other at Chernobyl in Ukraine in 1986.

The Three Mile Island event was the only nuclear disaster in U.S. history. It is also unique in another sense—it was the only major disaster in world history in which not a single person was killed, or even injured in any way.

There were two 843 MWe Pressurized Water Reactors (PWRs) at Three Mile Island, labeled TMI-1 and TMI-2. On March 28, 1979, the date of the accident, TMI-1 was shut down, but TMI-2 was operating at full power when its turbine tripped. This shut off the secondary loop water flow to the steam generator. That, in turn, meant that nothing was taking heat away from the primary loop responsible for cooling the reactor. As a result, the control rods dropped into place, shutting down the chain reaction instantly. However, because the reactor had been operating for some time, a large inventory of highly radioactive fission products had built up in the core, and they continued to generate heat through radioactive decay at several percent of the reactor’s rated power after shutdown. So, instead of the thermal power of the reactor dropping from 2500 MWt (the thermal power of a nuclear reactor is about three times its electrical power, because PWRs operate with an efficiency of 33 percent) to zero, it dropped instantly to 175 MWt, decreasing to 50 MWt an hour after shutdown, and declining further to 20 MWt after 3 hours.
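To put those decay-heat figures in proportion, the short sketch below converts them to fractions of rated thermal power. It uses only the numbers quoted above; the variable names are illustrative.

```python
electric_mwe = 843.0   # TMI-2 electrical rating
efficiency = 0.33      # PWR thermal efficiency quoted in the text
rated_mwt = 2500.0     # rounded thermal rating used in the text

# Thermal power implied by the electrical rating (the text rounds this to 2500 MWt).
thermal_mwt = electric_mwe / efficiency
print(f"implied thermal power: {thermal_mwt:.0f} MWt")

decay_heat_mwt = {"at shutdown": 175.0, "after 1 hour": 50.0, "after 3 hours": 20.0}
fractions = {label: 100 * mwt / rated_mwt for label, mwt in decay_heat_mwt.items()}

for label, percent in fractions.items():
    print(f"{label}: {percent:.1f}% of rated thermal power")
```

On these figures the decay heat starts at 7 percent of rated power and falls below 1 percent within three hours, which is still tens of megawatts that must be carried away.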

The fact that a reactor would continue to generate decay heat even after the chain reaction was shut down is (and was) well known. According to antinuclear activists, it meant that while loss of coolant would cause nuclear fission to cease, the uncooled reactor would melt itself down, with a mass of highly radioactive fission products unstoppably melting their way through the 20 cm (8 inch) thick steel pressure vessel, then through the 2.6 meter (8.6 foot) thick containment building floor, then right on down through the Earth, all the way to China. This is the source of the 1979 film title The China Syndrome, which was released just 12 days before the Three Mile Island incident, forever conflating the two in the public’s mind, further fueling hysterical fear regarding nuclear power.

At TMI-2 this theory was put to the test, because while an emergency cooling system was in place to keep cooling water flowing into the reactor under such conditions, and it turned on automatically, the confused reactor operators turned it off. As a result, the reactor did melt down.

Instead of the hot fission products melting their way through the pressure vessel, the containment building, and the Earth, all the way to China, they actually melted their way a couple of centimeters (about an inch) into the pressure vessel and stopped there. That was it. A billion-dollar reactor was lost, but the containment system was never even seriously challenged. A few curies of radioactive iodine-131 gas (half-life about 8 days) were vented, exposing the public in the area to about 1 mrem of radiation, equivalent to the extra dose they would have received on a five-day ski trip to Colorado. The environmental impact was zero. If anyone was harmed, it was because the very antinuclear lawyers running the Nuclear Regulatory Commission decided that the accident warranted keeping the untouched TMI-1 unit shut down for the next six years, and it is estimated that the pollution emissions over that time released by the coal-fired power plants used to replace its output were probably responsible for about 300 deaths.10

The 2011 Japanese accident was much more serious. Caused by a powerful undersea earthquake and resulting tsunami that buffeted the facility with waves nearly fifty feet high, the power plant flooded, and both grid power and the onsite backup diesel generators were knocked out, eliminating the emergency core cooling system. This eventually led to a full meltdown of three of the six reactors. Nevertheless, if anything, the Fukushima event proved the safety of nuclear power. In the midst of a devastating disaster that killed some 28,000 people by drowning, falling buildings, fire, suffocation, exposure, disease, and many other causes, not a single person was killed by radiation. Nor was anyone outside the plant gate exposed to any significant radiological dose.

From the point of view of radiation release, Chernobyl was the most serious nuclear plant disaster of all time. At Chernobyl, the reactor actually had a runaway chain reaction and disassembled, breaching all containment. Approximately 50 people were killed during the event itself and the fire-fighting efforts that followed immediately thereafter. Furthermore, radioactive material comparable to that produced by an atomic bomb was released into the environment. According to a study by the International Atomic Energy Agency and World Health Organization using LNT methodology, over time, this fallout could theoretically cause up to four thousand deaths among the surrounding population.

Chernobyl was really about as bad as a nuclear accident can be. Yet, even if we accept the grossly exaggerated casualties predicted by LNT theory as being correct, in comparison to all the deaths caused every year as a result of the pollution emitted from coal-fired power plants, its impact was minor. Chernobyl-like catastrophes would have to occur every day to approach the toll on humanity currently inflicted by coal. By replacing a substantial fraction of the electricity that would otherwise have to be generated by fossil fuels, the nuclear industry has actually saved countless lives.11
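The "every day" comparison can be checked against the article's own numbers. The sketch below assumes the upper LNT estimate of 4,000 eventual deaths plus roughly 50 immediate deaths per Chernobyl-scale event, and it uses the 8 million per year figure quoted earlier for fossil power plant air pollution as the point of comparison; the variable names are illustrative.

```python
immediate_deaths = 50                 # killed during the event and firefighting
lnt_projected_deaths = 4000           # upper LNT estimate for the surrounding population
pollution_deaths_per_year = 8_000_000 # the article's figure for fossil plant air pollution

deaths_per_event = immediate_deaths + lnt_projected_deaths

# Hypothetical scenario: one Chernobyl-scale accident every day for a year.
daily_event_deaths_per_year = deaths_per_event * 365
ratio = pollution_deaths_per_year / daily_event_deaths_per_year

print(f"one Chernobyl per day: {daily_event_deaths_per_year:,} deaths per year")
print(f"air pollution toll is {ratio:.1f} times larger")
```

Even under that scenario, the yearly total comes to about 1.5 million, several times smaller than the pollution figure.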

Still, Chernobyl events need to be prevented, and they can be, by proper reactor engineering. First, the Chernobyl reactor had no containment building. If it had, there would have been no radiological release into the environment. Second, had the reactor been designed to lose reactivity beyond its design temperature, as all water-moderated reactors are, the runaway reaction would never have occurred at all. The key is to design the reactor in such a way that as its temperature increases, its power level will go down. In technical parlance, this is known as having a “negative temperature coefficient of reactivity.” Water is necessary for a sustained nuclear reaction in a pressurized water reactor because it serves to slow down, or “moderate,” the fast neutrons born of fission events enough for them to interact with surrounding nuclei to continue the reaction. (Like an asteroid passing by the Earth, a neutron is more likely to be pulled in to collide with a nucleus if it is going slow than if it is going fast.) It is physically impossible for such a water-moderated reactor to have a runaway chain reaction, because as soon as the reactor heats beyond a certain point, the water starts to boil. This reduces the water’s effectiveness as a moderator, and without moderation, fewer and fewer neutrons strike their target, causing the reactor’s power level to drop. The system is thus intrinsically stable, and there is no way to make it unstable. No matter how incompetent, crazy, or malicious the operators of a water-moderated reactor might be, they can’t make it go Chernobyl.

In contrast, the reactor that exploded at Chernobyl was moderated not by water, but by graphite, which does not boil. It, therefore, did not have the strong negative temperature reactivity feedback of a water-moderated system, and, because water absorbs neutrons while graphite does not, it actually had a positive temperature coefficient of reactivity, which caused power to soar once the water coolant was lost. It was thus an unstable system, vulnerable to a runaway reaction. With a huge amount of hot graphite freely exposed to the environment once the reactor was breached, fuel was available for a giant bonfire to send the whole accumulated stockpile of radioactive fission products right up into the sky. The Chernobyl reactor wasn’t simply unstable—it was flammable!
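The difference between a negative and a positive temperature coefficient can be illustrated with a toy feedback loop. The sketch below is not reactor physics: it is a dimensionless two-variable model invented for illustration, and the function `simulate` and all of its parameters (`reactivity_kick`, `cooling`, `cap`, and the coefficient values) are assumptions rather than data from any real reactor. It shows only the qualitative point: when heating reduces reactivity, a disturbance settles, and when heating adds reactivity, it runs away.

```python
import math


def simulate(alpha, reactivity_kick=0.05, cooling=0.1, dt=0.1, steps=2000, cap=1000.0):
    """Toy power/temperature loop; alpha is the temperature coefficient of reactivity.

    Returns (peak_power, final_power, ran_away). All quantities are dimensionless
    and chosen for illustration only.
    """
    power, temp = 1.0, 0.0  # start at nominal power and at the design temperature
    peak = power
    for _ in range(steps):
        # Net reactivity: a fixed disturbance plus temperature feedback.
        reactivity = reactivity_kick + alpha * temp
        # Power grows when reactivity is positive and decays when it is negative.
        power *= math.exp(reactivity * dt)
        # Power above nominal heats the core; cooling pulls temperature back down.
        temp += dt * ((power - 1.0) - cooling * temp)
        peak = max(peak, power)
        if power > cap:  # stop once the excursion is clearly a runaway
            return peak, power, True
    return peak, power, False


stable = simulate(alpha=-0.01)    # negative coefficient: heating reduces reactivity
unstable = simulate(alpha=+0.01)  # positive coefficient: heating adds reactivity

print("negative coefficient (peak, final, ran_away):", stable)
print("positive coefficient (peak, final, ran_away):", unstable)
```

With the negative coefficient, power overshoots modestly and settles at a new steady level where the temperature feedback cancels the disturbance. With the positive coefficient, the same disturbance grows until the loop hits its cap.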

No such system could ever get permitted in the United States or other Western countries that use nuclear reactors. Those who died at Chernobyl weren’t victims of nuclear power. They were victims of the Soviet Union.

Can reactors explode like bombs?

Chernobyl was a runaway fission reaction, but it was not an atomic bomb. The strength of the explosion was enough to blow the roof off the building and break the reactor apart into burning graphite fragments, but the total explosive yield was less than that provided by a medium-sized conventional bomb. Of course, the reactor operators weren’t trying to achieve a Hiroshima. But what if they had tried?

They still could not have done it. A bomb explosion needs to be done using fast neutrons. Slow neutrons take much too long to multiply, because each generation must go through dozens of collisions to bring them down to thermal energies where the uranium nuclei have big fission cross sections. Once the chain reaction power has reached a level where the system starts to disassemble, however, time is something you don’t have. As soon as the bomb breaks apart, the chain reaction will stop. So the best you can do with slow reactions is a Chernobyl-like fizzle. To make a bomb, you need to use fast neutrons, because only they can multiply fast enough. Since PWR fuel is only three percent enriched, it can’t sustain a critical chain reaction using fast neutrons. So it just won’t work.

Nuclear Proliferation

Couldn’t the industrial infrastructure used to produce three percent enriched fuel for nuclear reactors also be used to make 90 percent enriched material for bombs?

Yes. Natural uranium contains 0.7 percent uranium-235 (235U), which is capable of fission, and 99.3 percent uranium-238 (238U), which is not. In order to be useful in a commercial nuclear reactor, the uranium is typically enriched to a three percent concentration of 235U. The same enrichment facilities could indeed be used, with some difficulty, to further concentrate the uranium to 93 percent 235U, which would make it bomb-grade. Additionally, once the controlled reaction begins, some of the 238U will absorb neutrons, transforming it into plutonium-239 (239Pu), which is fissile. Such plutonium can be reprocessed out of the spent fuel and mixed with natural uranium to turn it into reactor-grade material. Under some circumstances, it could also be used to make bombs instead. Thus, the technical infrastructure required to support an end-to-end nuclear industry fuel cycle could also be used to make weapons.

It is also true, however, that such facilities could be used to make bomb-grade material without supporting any nuclear reactors. In fact, until Eisenhower’s Atoms for Peace policy was set forth, the AEC opposed nuclear reactors precisely because they represented a diversion of fissionable material from bomb making. If plutonium is desired, much better material for weapons purposes can be made in standalone atomic piles than can be made in commercial power stations. This is so because when Pu-239 is left in a reactor too long, it can absorb a neutron and become Pu-240, which is not fissile and is extremely difficult to separate from Pu-239. Further, while not fissile from reaction with neutrons, Pu-240 undergoes spontaneous fissions as a form of decay. Inserted into bomb material, it could set the bomb off prematurely. Commercial power operators don’t want to constantly be shutting down in order to remove lightly used fuel from their reactors.

In consequence, the Pu-240 content of used civilian reactor fuel builds up to about 26 percent of the Pu-239. If it’s more than 7 percent, however, it makes the plutonium useless in a bomb (and in fact the U.S. military spec requires it to be less than 1 percent). Both the United States and the Soviet Union had thousands of atomic weapons before either had a single nuclear power plant, using either highly enriched uranium or plutonium made in special military fuel production reactors that allow constant removal of fuel. Others desirous of obtaining atomic bombs could and would proceed the same way today.12

* * *

Due to advances in biology, it is now possible to make biological weapons that are far more dangerous than nuclear devices, at many orders of magnitude lower cost. During the recent COVID-19 epidemic, there were allegations that the virus was made by altering a native strain found in bats in the biological research facility in Wuhan, China. These claims are unproven and sharply disputed. What is not disputed, however, is that it could have been made there. Think about that: a facility costing less than 1/1000th of the infrastructure needed to produce a critical mass of bomb-grade material can be used to create a virus that kills millions.

That is the reality of the modern age. Scientific knowledge has given us extraordinary powers of creation, and, therefore, destruction as well, and there is no way to un-know that knowledge. If we wish to avoid catastrophe, we need to build a world that offers plenty for everyone.

That’s why we need nuclear power.

This article appeared in Skeptic magazine 28.2

And whether we decide to capitalize on it or not, nuclear power is coming, big time. While Americans and Europeans may think that nuclear is a declining industry, this is far from true. There are about 450 nuclear reactors in the world today. By 2050, China intends to build 450 more, domestically, and is actively vying with Russia for hundreds more nuclear projects that will be built in the developing sector. About 60 reactors are currently under construction worldwide.

Nuclear is the energy source that will power the human future, although, unfortunately, America and Europe might not be part of it. While it may take 16 years to build an LWR in the U.S., it still takes only 4 years to build one in South Korea. So maybe the South Koreans, or the Indians, will offer some free world competition in the global nuclear renaissance. We could too, but it will take a societal change of heart to make it so.

Adapted by the author from The Case for Nukes: How We Can Beat Global Warming and Create a Free, Open, and Magnificent Future (Polaris Books, 2023). Copyright © 2023 by Robert Zubrin. Reprinted with permission.

About the Author

Robert Zubrin is a nuclear and aerospace engineer who has worked in areas of radiation protection, nuclear power plant safety, and thermonuclear fusion research. Since 1996, he has been President of Pioneer Astronautics, an aerospace research and development company, where he led over 70 highly successful technology development projects for NASA, the U.S. military, and the Department of Energy. He is the author of 13 books, over 200 technical and non-technical papers in areas relating to aerospace and energy engineering, and is the inventor of over 20 U.S. patents.

References

- https://bit.ly/3GrDJGe

- Frank, A. (2018). Light of the Stars: Alien Worlds and the Fate of the Earth. W.W. Norton.

- https://bit.ly/406plKV. See also Beckmann, P. (1976). The Health Hazards of Not Going Nuclear. Golem Press; and Cravens, G. (2007). Power to Save the World: The Truth About Nuclear Energy. Vintage Books.

- https://go.nasa.gov/3KHCoO8

- https://bit.ly/3o0AjUy. See also, National Council on Radiation Protection and Measurements (1997). Uncertainties in Fatal Cancer Risk Estimates Used in Radiation Protection. (NCRP Report, no. 126).

- https://bit.ly/43d5Xyq. See also https://bit.ly/439LY3L. The Environmental Protection Agency offers a calculator that lets you estimate your annual radiation dosage: https://bit.ly/2wvj3Zw

- https://bit.ly/3MnjFZt

- https://bit.ly/3nXNMfP

- https://bit.ly/43d6PmK

- https://bit.ly/439M4s9

- This is because commercial reactors keep their fuel in place for a long time, during which some of the 239Pu created in the reactor absorbs a further neutron to become 240Pu. The 240Pu seriously degrades the value of the plutonium for weapons purposes. However, in standalone atomic piles, such as those developed at the Hanford Site during the Manhattan Project, the fuel is not left in the system for long, so the plutonium produced is not spoiled. In the case of thorium reactors, which breed 232Th to 233U, the use of reactor fuel for bomb-making becomes even more difficult, making such systems ideal for use in situations where proliferation is of concern.

- https://go.nature.com/418RqSp

This article was published on August 25, 2023.