“It ain’t what you don’t know that gets you into trouble. It’s what you know for sure that just ain’t so.” —Attributed to Mark Twain

When it comes to opinions concerning standardized tests, it seems that most people know for sure that tests are simply terrible. In fact, a recent article published by the National Education Association (NEA) began by saying, “Most of us know that standardized tests are inaccurate, inequitable, and often ineffective at gauging what students actually know.”1 But do they really know that standardized tests are all these bad things? What does the hard evidence suggest? In the same article, the author quoted a first-grade teacher who advocated teaching to each student’s particular learning style—another ill-conceived educational fad2 that, unfortunately, draws as much praise as standardized tests draw damnation.

Indeed, a typical post in even the most prestigious of news outlets3, 4 will make several negative claims about standardized admission tests. In this article, we describe each of those claims and then review what mainstream scientific research has to say about them.

Claim 1: Admission tests are biased against historically disadvantaged racial/ethnic groups.

Response: There are racial/ethnic average group differences in admission test scores, but those differences do not qualify as evidence that the tests are biased.

The claim that admission tests are biased against certain groups is an unwarranted inference based on differences in average test performance among groups.

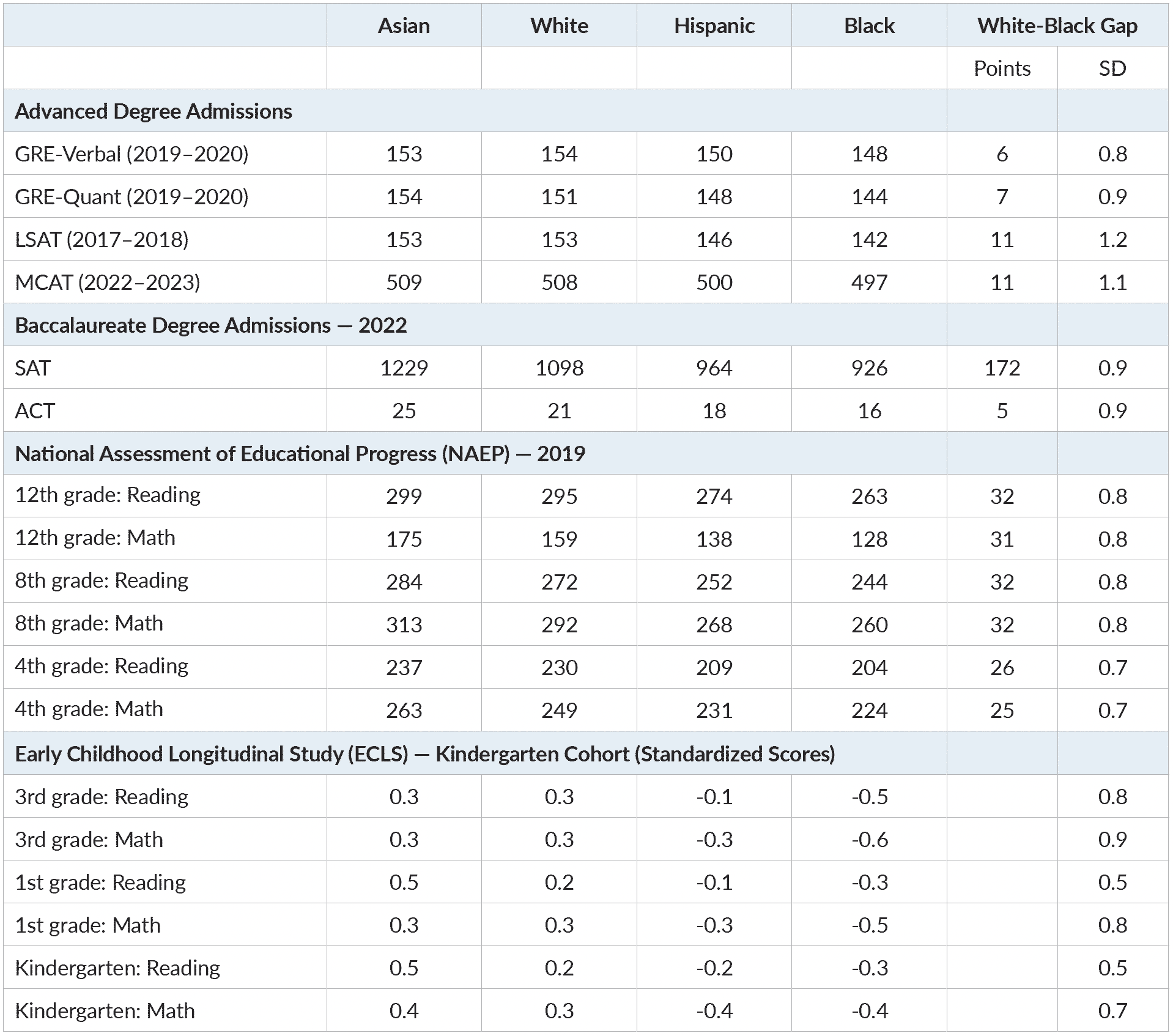

SD = standard deviation. GRE scores range from 130 to 170; data pulled from the Educational Testing Service. Available at https://rb.gy/iz5w8. (Here, “Hispanic” represents the largest group in the test-taker data, “other Hispanic.”) LSAT scores range from 120 to 180; data pulled from Lauth & Sweeney (2022), LSAT performance with regional, gender, and racial and ethnic breakdowns: 2011–2012 through 2017–2018 testing years. Available at https://rb.gy/gkj3z. MCAT (total) scores range from 472 to 528; data pulled from the Association of American Medical Colleges. Available at https://rb.gy/jegmr. SAT (total) scores range from 400 to 1600; in 2022 the overall SD was around 200. Data pulled from the SAT suite of assessments annual report, available at https://rb.gy/7a7ms. ACT (composite) scores range from 1 to 36, with an SD of around 5 to 5.5. Data pulled from the ACT annual report: https://rb.gy/k7kf5. NAEP scores range from 0 to 500 for Reading, and 0 to 500 for Math in 4th and 8th grades and 0 to 300 for Math in 12th grade. Data were pulled from National Report Cards: nationsreportcard.gov. Standard deviations found in various tables (nces.ed.gov) for previous years ranged from 33 to 42, so standardized differences were estimated using a standard deviation of 38. ECLS scores are pulled from Fryer and Levitt (2006), where they are reported as standardized scores with a full-sample mean of 0.0 and standard deviation of 1.0.

The differences themselves are not in question. They have persisted for decades despite substantial efforts to ameliorate them.5 As shown in the table above and reviewed more comprehensively elsewhere,6, 7 average group differences appear on just about any test of cognitive performance, even those administered before kindergarten. Gaps in admission test performance among racial groups mirror other achievement gaps (e.g., high school GPA) that also manifest well before high school graduation. (Note: these group differences are differences between the averages, or technically the means, for the respective groups. The full range of scores is found within all the groups, and there is significant overlap between groups.)

Group differences in admission test scores do not mean that the tests are biased. An observed difference does not provide an explanation of the difference, and to presume that a group difference is due to a biased test is to presume an explanation of the difference. As noted recently by scientists Jerry Coyne and Luana Maroja, the existence of group differences on standardized tests is well known; what is not well understood is what causes the disparities: “genetic differences, societal issues such as poverty, past and present racism, cultural differences, poor access to educational opportunities, the interaction between genes and social environments, or a combination of the above.”8 Test bias, then, is just one of many potential factors that could be responsible for group disparities in performance on admission tests. As we will see in addressing Claim 2, psychometricians have a clear empirical method for confirming or disconfirming the existence of test bias and they have failed to find any evidence for its existence. (Psychometrics is that division of psychology concerned with the theory and technique of measurement of cognitive abilities and personality traits.)

Claim 2: Standardized tests do not predict academic outcomes.

Response: Standardized tests do predict academic outcomes, including academic performance and degree completion, and they predict with similar accuracy for all racial/ethnic groups.

The purpose of standardized admission tests is simple: to predict applicants’ future academic performance. Any metric that fails to predict is useless for making admission decisions. The Scholastic Assessment Test (now simply called the SAT) has predictive validity if it predicts outcomes such as college grade point average (GPA), whether the student returns for the second year (retention), and degree completion. Likewise, the Graduate Record Examination (GRE) has predictive validity if it predicts outcomes such as graduate school GPA, degree completion, and the important real-world measure of publications. In practice, predictive validity between, for example, SAT scores and college GPA implies that if you pull two SAT-takers at random off the street, the one who earned the higher SAT score is more likely to earn a higher GPA in college (and is less likely to drop out). The predictive utility of standardized tests is solid and well established. In the same way that blood pressure is an important but not perfect predictor of stroke, cognitive test scores are an important but not perfect predictor of academic outcomes. For example, the correlation between SAT scores and college GPA is around .5,9, 10, 11 the correlations between GRE scores and various measures of graduate school performance range between .3 and .4,12 and the correlation between Medical College Admission Test (MCAT) scores and licensing exam scores during medical school is greater than .6.13 Using aggregate rather than individual test scores yields even higher correlations, such as those that predict a college’s graduation rate from the ACT/SAT scores of its incoming students. Based on 2019 data, the correlations between six-year graduation rate and a college’s 25th percentile ACT or SAT score are between .87 and .90.14
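To make a correlation of about .5 more concrete, the following sketch converts a correlation into the chance that, of two randomly chosen people, the one with the higher test score also has the higher outcome. It assumes that scores and outcomes are jointly normally distributed, which is an assumption made here for illustration and not a claim from the cited studies; under that assumption the probability is 1/2 + arcsin(r)/π. The function name is hypothetical.

```python
import math

def prob_higher_scorer_does_better(r: float) -> float:
    """Chance that the higher scorer of a random pair also has the higher outcome.

    Assumes test scores and outcomes are bivariate normal with correlation r.
    """
    if not -1.0 <= r <= 1.0:
        raise ValueError("a correlation must lie between -1 and 1")
    return 0.5 + math.asin(r) / math.pi

# Correlations quoted in the text (GRE range, SAT, MCAT lower bound).
for label, r in [("GRE (low)", 0.3), ("GRE (high)", 0.4), ("SAT", 0.5), ("MCAT", 0.6)]:
    print(f"{label}: r = {r:.1f} -> {prob_higher_scorer_does_better(r):.2f}")
```

Under this assumption, a correlation of .5 corresponds to about a two-in-three chance that the higher scorer also earns the higher outcome, compared with one in two for a predictor that carries no information.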

Research confirming the predictive validity of standardized tests is robust and provides a stark contrast to popular claims to the contrary.13, 15, 16 The latter are not based on the results of meta-analyses12, 17 nor on studies conducted by psychometricians.10, 18, 19, 20, 21 Rather, those claims are based on cherry-picked studies that rely on select samples of students who have already been admitted to highly selective programs—partially because of their high test scores—and who therefore have a severely restricted range of test scores. For example, one often-mentioned study22 investigated whether admitted students’ GRE scores predicted PhD completion in STEM programs and found that students with higher scores were not more likely to complete their degree. In another study of students in biomedical graduate programs at Vanderbilt,23 links between GRE scores and academic outcomes were trivial. However, because the samples of students in both studies had a restricted range of GRE scores—all scored well above average24—the results are essentially uninterpretable. This situation is analogous to predicting U.S. men’s likelihood of playing college basketball based on their height, but only including in the sample men who are well above average. If we want to establish the link between men’s height and playing college ball, it is more appropriate to begin with a sample of men who range from 5’1″ (well below the mean) to 6’7″ (well above the mean) than to begin with a restricted sample of men who are all at least 6’4″ (two standard deviations above the mean). In the latter context, what best differentiates those who play college ball versus not is unlikely to be their height—not when they are all quite tall to begin with.
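The effect of range restriction can be illustrated with a small simulation. The sketch below is not drawn from the cited studies: it assumes standardized, normally distributed scores and outcomes, a true correlation of .5, and an admission cutoff one standard deviation above the mean (all illustrative choices, as are the function names). It then compares the correlation in the full applicant pool with the correlation among only those above the cutoff.

```python
import random

def pearson(xs, ys):
    """Pearson correlation between two equal-length lists of numbers."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / (sxx * syy) ** 0.5

def simulate_range_restriction(n=20000, true_r=0.5, cutoff=1.0, seed=0):
    """Return (full-pool r, admitted-only r, number admitted) for simulated data."""
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    pairs = []
    for _ in range(n):
        score = rng.gauss(0, 1)
        # Outcome built so that its correlation with score is true_r in the full pool.
        outcome = true_r * score + (1 - true_r ** 2) ** 0.5 * rng.gauss(0, 1)
        pairs.append((score, outcome))
    admitted = [p for p in pairs if p[0] >= cutoff]  # keep only high scorers
    full_r = pearson([s for s, _ in pairs], [o for _, o in pairs])
    restricted_r = pearson([s for s, _ in admitted], [o for _, o in admitted])
    return full_r, restricted_r, len(admitted)

full_r, restricted_r, n_admitted = simulate_range_restriction()
print(f"full pool: r = {full_r:.2f}")
print(f"admitted only (n = {n_admitted}): r = {restricted_r:.2f}")
```

Under these assumptions the correlation among the admitted group comes out well below the correlation in the full pool, even though the underlying relationship between score and outcome is identical for everyone.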

Given these demonstrated facts about predictive validity, let’s return to the first claim, that admission tests are biased against certain groups. This claim can be evaluated by comparing the predictive validities for each racial or ethnic group. As noted previously, the purpose of standardized admission tests is to predict applicants’ future academic performance. If the tests serve that purpose similarly for all groups, then, by definition, they are not biased. And this is exactly what scientific studies find, time and time again. For example, the SAT is a strong predictor of first-year college performance and retention to the second year, and it predicts with essentially equal accuracy for students of varying racial and ethnic groups.10, 25 Thus, regardless of whether individuals are Black, Hispanic, White, or Asian, if they score higher on the SAT, they have a higher probability of doing well in college. Likewise, individuals who score higher on the GRE tend to have higher graduate school GPAs and a higher likelihood of eventual degree attainment; and these correlations manifest similarly across racial/ethnic groups, males and females, academic departments and disciplines, and master’s as well as doctoral programs.12, 20, 26, 27 When differential prediction does occur, it is usually in the direction of slightly overpredicting Black students’ performance (such that Black students perform at a somewhat lower level in college than would be expected based on their test scores).
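One common way to check for differential prediction is to fit a single regression line to all students and then ask whether any group’s actual outcomes fall systematically above or below that line. The sketch below illustrates that idea only: the records are invented toy values, the group labels “A” and “B” are placeholders, and the function names are hypothetical. A mean residual near zero for every group indicates similar prediction, whereas a negative mean residual means the common line overpredicts that group’s outcomes.

```python
from collections import defaultdict

def fit_line(xs, ys):
    """Ordinary least squares fit of y = intercept + slope * x."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    slope = (sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
             / sum((x - mean_x) ** 2 for x in xs))
    return mean_y - slope * mean_x, slope

def mean_residual_by_group(records):
    """records: iterable of (group, test_score, outcome) tuples.

    Fits one line to everyone, then averages (actual - predicted) per group.
    """
    records = list(records)
    intercept, slope = fit_line([r[1] for r in records], [r[2] for r in records])
    residuals = defaultdict(list)
    for group, score, outcome in records:
        residuals[group].append(outcome - (intercept + slope * score))
    return {group: sum(vals) / len(vals) for group, vals in residuals.items()}

# Invented toy records: (group, test score, first-year GPA).
same_line = ([("A", s, 1.0 + 0.002 * s) for s in (1000, 1100, 1200, 1300)]
             + [("B", s, 1.0 + 0.002 * s) for s in (900, 1000, 1100, 1200)])
# Same scores, but group B's outcomes are lowered by 0.2 GPA points.
shifted = [(g, s, gpa - 0.2 if g == "B" else gpa) for g, s, gpa in same_line]

print(mean_residual_by_group(same_line))  # both means are essentially zero
print(mean_residual_by_group(shifted))    # B's mean is negative: B is overpredicted
```

In the first toy data set one line fits both groups, so neither is over- or underpredicted. In the second, the shared line predicts higher outcomes for group B than group B actually attains, which is the pattern the text calls overprediction.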

Claim 3: Standardized tests are just indicators of wealth or access to test preparation courses.

Response: Standardized tests were designed to detect (sometimes untapped) academic potential, which is very useful; and controlling for wealth and privilege does not detract from their utility.

Some who are critical of standardized tests say that their very existence is racist. That argument is not borne out by the history and expansion of the SAT. One of the long-standing purposes of the SAT has been to lessen the use of legacy admissions (set-asides for the progeny of wealthy donors to the college or university) and thereby to draw college students from more walks of life than elite high schools of the East Coast.28 Standardized tests have a long history of spotting “diamonds in the rough”—underprivileged youths of any race or ethnic group whose potential has gone unnoticed or who have under-performed in high school (for any number of potential reasons, including intellectual boredom). Notably, comparisons of Black and White students with similar 12th grade test scores show that Black students are more likely than White students to complete college.6 And although most of us think of the SAT and comparable American College Test (ACT) as tests taken by high school juniors and seniors, these tests have a very successful history of identifying intellectual potential among middle-schoolers29 and predicting their subsequent educational and career accomplishments.30

Students of higher socioeconomic status (SES) do tend to score higher on the SAT and fare somewhat better in college.31 However, this link is not nearly as strong as many people, especially critics of standardized tests, tend to assume—17 percent of the top 10 percent of ACT and SAT scores come from students whose family incomes fall in the bottom 25 percent of the distribution.32 Further, if admission tests were mere “wealth” tests, the association between students’ standardized test scores and performance in college would be negligible once students’ SES is accounted for statistically. Instead, the association between SAT scores and college grades (estimated at .47) is essentially unchanged (moving only to .44) after statistically controlling for SES.31, 33
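The logic of “statistically controlling for SES” can be sketched with the standard first-order partial correlation formula. This is an illustration of the general technique, not a reproduction of the cited analyses: only the .47 test-grade correlation comes from the text, while the two SES correlations in each scenario are hypothetical values chosen for demonstration, and the function name is an assumption.

```python
import math

def partial_correlation(r_xy: float, r_xz: float, r_yz: float) -> float:
    """Correlation between x and y after removing the linear effect of z from both."""
    return (r_xy - r_xz * r_yz) / math.sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))

R_TEST_GRADES = 0.47  # test-grade correlation quoted in the text

# Hypothetical scenario 1: SES is modestly related to scores and to grades.
modest = partial_correlation(R_TEST_GRADES, r_xz=0.4, r_yz=0.2)

# Hypothetical scenario 2: a "wealth test" world in which SES is strongly
# related to both scores and grades.
wealth_test = partial_correlation(R_TEST_GRADES, r_xz=0.8, r_yz=0.6)

print(f"modest SES links:      partial r = {modest:.2f}")
print(f"'wealth test' scenario: partial r = {wealth_test:.2f}")
```

With modest SES links, the test-grade correlation barely moves after SES is controlled. Only in the hypothetical scenario where SES is strongly tied to both scores and grades does the association collapse toward zero, which is what the “wealth test” criticism would require.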

A related common criticism of standardized tests is that higher SES students have better access to special test preparation programs and specific coaching services that advertise their potential to raise students’ test scores. The findings from systematic research, however, are clear: test preparation programs, including semester-long, weekly, in-person structured sessions with homework assignments,34 produce limited gains, and this is the case for the ACT, SAT, GRE, and LSAT.35, 36, 37, 38 Average gains are small, approximately one-tenth to one-fifth of a standard deviation. Moreover, free test preparation materials are readily available at libraries and online; and for tests such as the SAT and ACT, many high schools now provide, and often require, free in-class test preparation sessions during the year leading up to the test.
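To put those effect sizes on the familiar score scales, a gain expressed in standard deviation units can be multiplied by each test’s standard deviation. The sketch below uses the approximate standard deviations quoted in the table note earlier in this article (about 200 for the SAT total and about 5 to 5.5 for the ACT composite); the function name is illustrative, and the results are rough conversions, not findings reported by the cited studies.

```python
def gain_in_points(effect_size_sd: float, test_sd: float) -> float:
    """Convert a gain in standard deviation units into scale-score points."""
    return effect_size_sd * test_sd

SAT_SD = 200.0              # approximate overall SD of SAT total scores
ACT_SD_RANGE = (5.0, 5.5)   # approximate SD range of ACT composite scores
EFFECT_SIZES = (0.1, 0.2)   # one-tenth to one-fifth of a standard deviation

sat_low = gain_in_points(EFFECT_SIZES[0], SAT_SD)
sat_high = gain_in_points(EFFECT_SIZES[1], SAT_SD)
act_low = gain_in_points(EFFECT_SIZES[0], ACT_SD_RANGE[0])
act_high = gain_in_points(EFFECT_SIZES[1], ACT_SD_RANGE[1])

print(f"SAT total: about {sat_low:.0f} to {sat_high:.0f} points")
print(f"ACT composite: about {act_low:.1f} to {act_high:.1f} points")
```

On those figures, typical coaching gains amount to roughly 20 to 40 points on the SAT total score and roughly half a point to one point on the ACT composite.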

Claim 4: Admission decisions are fairer without standardized tests.

Response: The admissions process will be less useful, and more unfair, if standardized tests are not used.

According to the fairtest.org website, in 2019, before the pandemic, just over 1,000 colleges were test-optional. Today, there are over 1,800. In 2022–2023, only 43 percent of applicants submitted ACT/SAT scores, compared to 75 percent in 2019–2020.39 Currently, there are over 80 colleges that do not consider ACT/SAT scores in the admissions process even if an applicant submits them. These colleges are using a test-free or test-blind admissions policy. The same trend is occurring for the use of the GRE among graduate programs.4

The movement away from admission tests began before the COVID-19 pandemic but was accelerated by it, and there are multiple reasons why so many colleges and universities are remaining test-optional or test-free. First, very small colleges (and programs) have taken enrollment hits and suffered financially. By eliminating the tests, they hope to attract more applicants and, in turn, enroll more students. Once a few schools go test-optional or test-free, other schools feel they have to as well in order to be competitive in attracting applicants. Second, larger, less-selective schools (and programs) can similarly benefit from relaxed admission standards by enrolling more students, which, in turn, benefits their bottom line. Both types of schools also increase their percentages of minority student enrollment. It looks good to their constituents that they are enrolling young people from historically underrepresented groups and giving them a chance at success in later life. Highly selective schools also want a diverse student body but, like the previously mentioned schools, will not see much of a change in minority graduation rates simply by lowering admission standards if they also maintain their classroom academic standards. They will get more applicants, but they are still limited by the number of students they can serve. Rejection rates increase (due to more applicants), and other metrics become more important in identifying which students can succeed in a highly competitive academic environment.

There are multiple concerns with not including admission tests as a metric to identify students’ potential for succeeding in college and advanced degree programs, particularly those programs that are highly competitive. First, the admissions process will be less useful. Other metrics, with the exception of high school GPA as a solid predictor of first-year grades in college, have lower predictive validity than tests such as the SAT. For example, letters of recommendation are generally considered nearly as important as test scores and prior grades, yet letters of recommendation are infamously unreliable: there is more agreement between two letters about two different applicants from the same letter-writer than there is between two letters about the same applicant from two different letter-writers.40 (Tip to applicants: make sure you ask the right person to write your recommendation.) Moreover, letters of recommendation are weak predictors of subsequent performance. The validity of letters of recommendation as a predictor of college GPA hovers around .3; and although letters of recommendation are ubiquitous in applications for entry to advanced degree programs, their predictive validity in that context is even weaker.41 More importantly, White and Asian students typically get more positive letters of recommendation than students from underrepresented groups.42 For colleges that want a more diverse student body, placing more emphasis on admission metrics that also show race differences will not help.

This brings us to our second concern. Because race differences exist in most metrics that admission officers would consider, getting rid of admission test scores will not solve any problems. For example, race differences in performance on Advanced Placement (AP) course exams, now used as an indicator of college readiness, are substantial. In 2017, just 30 percent of Black students’ AP exams earned a qualifying score compared to more than 60 percent of Asian and White students’ exams.43 Similar disparities exist for high school GPA; in 2009, Black students averaged 2.69, whereas White students averaged 3.09,44 even with grade inflation across U.S. high schools.45, 46 Finally, as mentioned previously, race differences even exist in the very subjective letters of recommendation submitted for college admission.47

Without the capacity to rely on a standard, objective metric such as an admission test score, some admissions committee members may rely on subjective factors, which will only exacerbate any disparate representation of students who come from lower-income families or historically underrepresented racial and ethnic groups. For example, in the absence of standardized test scores, admissions committee members may give more attention to the name and reputation of students’ high school, or, in the case of graduate admissions, the name recognition of their undergraduate research mentor and university. Admissions committees for advanced degree programs may be forced to pay greater attention to students’ research experience and personal statements, which are unfortunately susceptible to a variety of issues, not the least being that students of high socioeconomic backgrounds may have more time to invest in gaining research experience, as well as the resources to pay for “assistance” in preparing a well-written and edited personal statement.48

So why continue to shoot the messenger?

If scientists were to find that a medical condition is more common in one group than in another, they would not automatically presume the diagnostic test is invalid or biased. As one example, during the pandemic, COVID-19 infection rates were higher among Black and Hispanic Americans compared to White and Asian Americans. Scientists did not shoot the messenger or engage in ad hominem attacks by claiming that the very existence of COVID tests or support for their continued use is racist.

This article appeared in Skeptic magazine 28.3

Sadly, however, that is not the case with standardized tests of college or graduate readiness, which have been attacked for decades,49 arguably because they reflect an inconvenient, uncomfortable, and persistent truth in our society: There are group differences in test performance, and because the tests predict important life outcomes, the group differences in test scores forecast group differences in those life outcomes.

The attack on testing is likely rooted in a well-intentioned concern that the social consequences of test use are inconsistent with our social values of equality.50 That is, there is a repeated and illogical rejection of what “is” in favor of what educators feel “ought” to be.51 However, as we have seen in addressing misconceptions about admission tests, removing tests from the process is not going to address existing inequities; if anything, it promises to exacerbate them by denying the existence of actual performance gaps. If we are going to move forward on a path that promises to address current inequities, we can best do so by assessing as accurately as possible each individual to provide opportunities and interventions that coincide with that individual’s unique constellation of abilities, skills, and preferences.52, 53

About the Authors

April Bleske-Rechek is a professor of psychology at the University of Wisconsin-Eau Claire (UWEC), where she directs the Individual Differences and Evolutionary Psychology lab. Her work has been published in a variety of peer-reviewed journals, including Intelligence, Evolutionary Behavioral Sciences, and Personality and Individual Differences; she is also an active member of the Foundation for Individual Rights and Expression (FIRE) faculty network. Dr. Bleske-Rechek has received several teaching and mentoring awards, most recently the Council of Undergraduate Research (CUR) Mid-Career Mentoring Award (2020) and the UWEC College of Arts & Sciences Career Excellence in Teaching Award (2021).

Daniel H. Robinson is Associate Dean of Research and the K-16 Mind, Brain, and Education Endowed Chair in the College of Education at the University of Texas at Arlington. He has served as editor of Educational Psychology Review (2006–2015), as associate editor of the Journal of Educational Psychology (2014–2020), as an editorial board member of nine journals, and currently as editor of Monographs in the Psychology of Education: Child Behavior, Cognition, Development, and Learning, Springer Publishing. Dr. Robinson was a Fulbright Specialist Scholar at Victoria University, Wellington, New Zealand.

References

1. https://rb.gy/v89cw

2. https://rb.gy/fayim

3. https://rb.gy/o5d3n

4. Langin, K. (2019). PhD Programs Drop Standardized Exam. Science, 364(6443), 816.

5. https://rb.gy/k368u

6. Jencks, C., & Phillips, M. (1998). The Black-White Test Score Gap. Brookings Institution Press.

7. Jensen, A. (1998). The G Factor: The Science of Mental Ability. Praeger.

8. https://rb.gy/i6b25

9. Berry, C.M., & Sackett, P.R. (2009). Individual Differences in Course Choice Result in Underestimation of the Validity of College Admissions Systems. Psychological Science, 20(7), 822–830.

10. https://rb.gy/xt8kc

11. https://rb.gy/edub9

12. Kuncel, N.R., Hezlett, S.A., & Ones, D.S. (2001). A Comprehensive Meta-Analysis of the Predictive Validity of the Graduate Record Examinations: Implications for Graduate Student Selection and Performance. Psychological Bulletin, 127(1), 162–181.

13. Kuncel, N.R., & Hezlett, S.A. (2010). Fact and Fiction in Cognitive Ability Testing for Admissions and Hiring Decisions. Current Directions in Psychological Science, 19(6), 339–345.

14. https://rb.gy/qr0wq

15. Kuncel, N.R., Ones, D.S., & Sackett, P.R. (2010). Individual Differences as Predictors of Work, Educational, and Broad Life Outcomes. Personality and Individual Differences, 49, 331–336.

16. Sackett, P.R., Borneman, M.J., & Connelly, B.S. (2008). High-Stakes Testing in Higher Education and Employment: Appraising the Evidence for Validity and Fairness. American Psychologist, 63(4), 215–227.

17. Kuncel, N.R., & Hezlett, S.A. (2007). Standardized Tests Predict Graduate Students’ Success. Science, 315, 1080–1081.

18. Bridgeman, B., Burton, N., & Cline, F. (2008). Understanding What the Numbers Mean: A Straightforward Approach to GRE Predictive Validity. ETS RR-08-46.

19. https://rb.gy/iknta

20. Klieger, D.M., Cline, F.A., Holtzman, S.L., Minsky, J.L., & Lorenz, F. (2014). New Perspectives on the Validity of the GRE General Test for Predicting Graduate School Grades. ETS Research Report No. RR-14-26.

21. https://rb.gy/et4ja

22. https://rb.gy/22u4u

23. https://rb.gy/jz1tb

24. https://rb.gy/tc819

25. Mattern, K.D., Patterson, B.F., Shaw, E.J., Kobrin, J.L., & Barbuti, S.M. (2008). Differential Validity and Prediction of the SAT. College Board Research Report No. 2008-4.

26. Burton, N.W., & Wang, M. (2005). Predicting Long-Term Success in Graduate School: A Collaborative Validity Study. GRE Board Report No. 99-14R; ETS RR-05-03.

27. Kuncel, N.R., Wee, S., Serafin, L., & Hezlett, S.A. (2010). The Validity of the Graduate Record Examination for Master’s and Doctoral Programs: A Meta-Analytic Investigation. Educational and Psychological Measurement, 70(2), 340–352.

28. Lemann, N. (2004). A History of Admissions Testing. In Rebecca Zwick (ed.), Rethinking the SAT: The Future of Standardized Testing in University Admissions (pp. 5–14). RoutledgeFalmer.

29. Stanley, J.C. (1985). Finding Intellectually Talented Youth and Helping Them Educationally. The Journal of Special Education, 19(3), 363–372.

30. Lubinski, D. (2009). Exceptional Cognitive Ability: The Phenotype. Behavior Genetics, 39, 350–358.

31. Sackett, P.R., Kuncel, N.R., Beatty, A.S., Rigdon, J.L., Shen, W., & Kiger, T.B. (2012). The Role of Socioeconomic Status in SAT-Grade Relationships and in College Admissions Decisions. Psychological Science, 23(9), 1000–1007.

32. Hoxby, C., & Avery, C. (2013). The Missing “One-Offs”: The Hidden Supply of High-Achieving, Low-Income Students. Brookings Papers on Economic Activity.

33. Sackett, P.R., Kuncel, N.R., Arneson, J.J., Cooper, S.R., & Waters, S.D. (2009). Does Socioeconomic Status Explain the Relationship Between Admissions Tests and Post-Secondary Academic Performance? Psychological Bulletin, 135(1), 1–22.

34. https://rb.gy/gezki

35. Becker, B.J. (1990). Coaching for the Scholastic Aptitude Test: Further Synthesis and Appraisal. Review of Educational Research, 60, 373–417.

36. Powers, D.E. (1985). Effects of Coaching on GRE Aptitude Test Scores. Journal of Educational Measurement, 22(2), 121–136.

37. Powers, D.E., & Rock, D.A. (1999). Effects of Coaching on SAT I: Reasoning Test Scores. Journal of Educational Measurement, 36(2), 93–118.

38. Zwick, R. (2002). Is the SAT a ‘Wealth Test’? Phi Delta Kappan, 84(4), 307–311.

39. https://rb.gy/i0oet

40. Baxter, J.C., Brock, B., & Rozelle, R.M. (1981). Letters of Recommendation: A Question of Value. Journal of Applied Psychology, 66(3), 296–301.

41. https://rb.gy/bqiao

42. https://rb.gy/mdv1g

43. Finn, C.E., & Scanlan, A.E. (2019). Learning in the Fast Lane: The Past, Present, and Future of Advanced Placement. Princeton University Press.

44. U.S. Department of Education, Institute of Education Sciences, National Center for Education Statistics. (2009). The Nation’s Report Card. (NCES 2011-462). Washington, DC: U.S. Government Printing Office.

45. https://rb.gy/3z8bd

46. https://rb.gy/x1zg1

47. Akos, P., & Kretchmar, J. (2016–2017). Gender and Ethnic Bias in Letters of Recommendation: Considerations for School Counselors. Professional School Counseling, 20(1), 102–113.

48. https://rb.gy/v0w2u

49. Green, B.F. (1978). In Defense of Measurement. American Psychologist, 33(7), 664–670.

50. Lees-Haley, P.R. (1996). Alice in Validityland, or the Dangerous Consequences of Consequential Validity. American Psychologist, 51(9), 981–983.

51. Davis, B.D. (1978). The Moralistic Fallacy. Nature, 272, 390.

52. Lubinski, D. (2020). Understanding Educational, Occupational, and Creative Outcomes Requires Assessing Intraindividual Differences in Abilities and Interests. Proceedings of the National Academy of Sciences, 117(29), 16720–16722.

53. https://rb.gy/9or3o

This article was published on July 17, 2023.